As a follow-up to our 2016 thread: Timothy B. Lee has a great piece up at Ars Technica on how the National Highway Traffic Safety Administration screwed up its analysis of the safety record of Tesla's badly named Autopilot. The mistakes are really embarrassing, but perhaps the most disturbing part is the way that NHTSA kowtowed to the very company it was supposed to be investigating.

In 2017, the feds said Tesla Autopilot cut crashes 40%—that was bogus

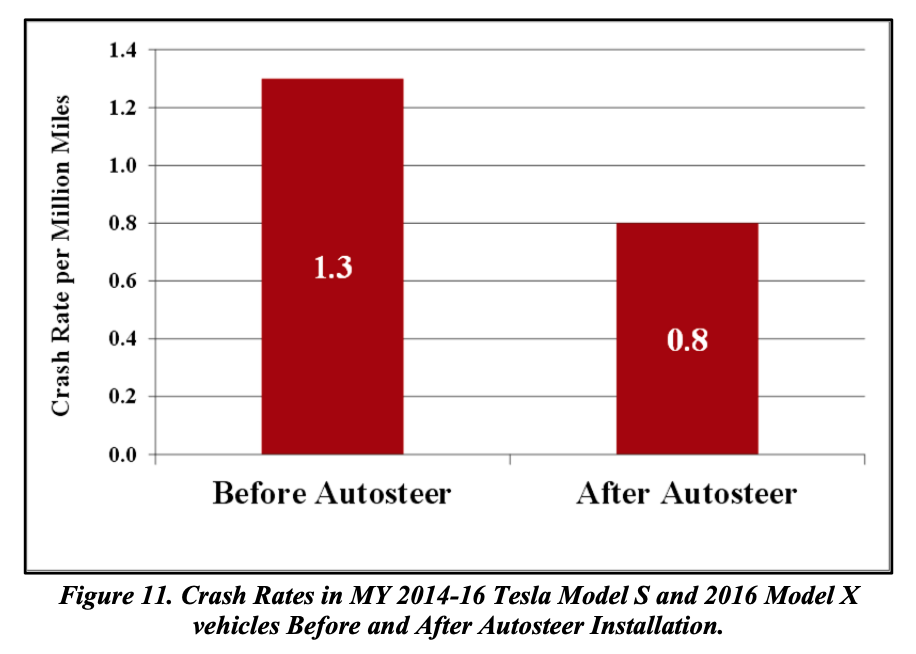

To compute a crash rate, you take the number of crashes and divide it by the number of miles traveled. NHTSA did this calculation twice—once for miles traveled before the Autosteer upgrade, and again for miles traveled afterward. NHTSA found that crashes were more common before Autosteer, and the rate dropped by 40 percent once the technology was activated.

In a calculation like this, it's important for the numerator and denominator to be drawn from the same set of data points. If the miles from a particular car aren't in the denominator, then crashes for that same car can't be in the numerator—otherwise the results are meaningless.

Yet according to QCS, that's exactly what NHTSA did. Tesla provided NHTSA with data on 43,781 vehicles, but 29,051 of these vehicles were missing data fields necessary to calculate how many miles these vehicles drove prior to the activation of Autosteer. NHTSA handled this by counting these cars as driving zero pre-Autosteer miles. Yet NHTSA counted these same vehicles as having 18 pre-Autosteer crashes—more than 20 percent of the 86 total pre-Autosteer crashes in the data set. The result was to significantly overstate Tesla's pre-Autosteer crash rate.
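To see how much this one mismatch matters on its own, here is a minimal Python sketch. The crash counts (86 total, 18 from the vehicles counted as zero miles) come from the passage above; the mileage figure is a hypothetical placeholder, since the excerpt gives no mileage totals. Because the same denominator appears in both rates, the relative overstatement does not depend on that placeholder. The sketch isolates only this numerator/denominator mismatch and is not a reconstruction of either NHTSA's or QCS's full calculation.

```python
def crash_rate(crashes: int, miles: float) -> float:
    """Return crashes per million miles."""
    return crashes / miles * 1_000_000


# Figures quoted in the article.
TOTAL_PRE_CRASHES = 86    # all pre-Autosteer crashes in the data set
ORPHAN_PRE_CRASHES = 18   # crashes from cars counted as zero pre-Autosteer miles

# HYPOTHETICAL placeholder: the real pre-Autosteer mileage is not in the excerpt.
PRE_MILES = 100_000_000

# Mismatched: numerator includes crashes whose miles are missing from the denominator.
mismatched = crash_rate(TOTAL_PRE_CRASHES, PRE_MILES)

# Consistent: drop the crashes that have no corresponding miles.
consistent = crash_rate(TOTAL_PRE_CRASHES - ORPHAN_PRE_CRASHES, PRE_MILES)

# Relative overstatement of the pre-Autosteer rate: 86/68 - 1.
overstatement = mismatched / consistent - 1
print(f"Pre-Autosteer rate overstated by {overstatement:.1%}")
```

With these counts the mismatched pre-Autosteer rate comes out roughly a quarter higher than the consistent one, whatever mileage is plugged in.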

...

It's only possible to compute accurate crash rates for vehicles that have complete data and no gap between the pre-Autosteer and post-Autosteer odometer readings. Tesla's data set only included 5,714 vehicles like that. When QCS director Randy Whitfield ran the numbers for these vehicles, he found that the rate of crashes per mile increased by 59 percent after Tesla enabled the Autosteer technology.
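The filtering step described above can be sketched roughly as follows. This is an illustration only: the `Vehicle` record, its field names, and the toy fleet are all hypothetical, and nothing here reflects the actual layout of Tesla's data or the method QCS used. It simply keeps vehicles with both mileage figures and no odometer gap, then compares pooled crash rates before and after.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Vehicle:
    # All fields are hypothetical; the real data layout is not described in the article.
    pre_miles: Optional[float]   # miles before Autosteer, None if not computable
    post_miles: Optional[float]  # miles after Autosteer, None if not computable
    has_gap: bool                # True if pre/post odometer readings do not meet
    pre_crashes: int
    post_crashes: int


def usable(v: Vehicle) -> bool:
    """Keep only vehicles with complete mileage and no odometer gap."""
    return v.pre_miles is not None and v.post_miles is not None and not v.has_gap


def rate_change(fleet: List[Vehicle]) -> float:
    """Relative change in crashes per mile (post vs. pre) over usable vehicles."""
    kept = [v for v in fleet if usable(v)]
    pre_rate = sum(v.pre_crashes for v in kept) / sum(v.pre_miles for v in kept)
    post_rate = sum(v.post_crashes for v in kept) / sum(v.post_miles for v in kept)
    return post_rate / pre_rate - 1


# Toy fleet with made-up numbers, purely to exercise the function.
toy_fleet = [
    Vehicle(10_000, 20_000, False, 1, 1),
    Vehicle(None, 15_000, False, 1, 0),   # dropped: pre-Autosteer miles missing
    Vehicle(5_000, 5_000, True, 0, 1),    # dropped: gap between odometer readings
    Vehicle(10_000, 20_000, False, 1, 5),
]
change = rate_change(toy_fleet)
print(f"Crash rate change on usable vehicles: {change:+.0%}")
```

The point of the design is that crashes and miles are summed over the same filtered list, so a vehicle can never contribute to the numerator without also contributing to the denominator.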

So does that mean that Autosteer actually makes crashes 59 percent more likely? Probably not. Those 5,714 vehicles represent only a small portion of Tesla's fleet, and there's no way to know if they're representative. And that's the point: it's reckless to try to draw conclusions from such flawed data. NHTSA should have either asked Tesla for more data or left that calculation out of its report entirely.

NHTSA kept its data from the public at Tesla's behest

The misinformation in NHTSA's report could have been corrected much more quickly if NHTSA had chosen to be transparent about its data and methodology. QCS filed a Freedom of Information Act request for the data and methodology underlying NHTSA's conclusions in February 2017, about a month after the report was published. If NHTSA had supplied the information promptly, the problems with NHTSA's calculations would likely have been identified quickly. Tesla would not have been able to continue citing them more than a year after they were published.

Instead, NHTSA fought QCS' FOIA request after Tesla indicated that the data was confidential and would cause Tesla competitive harm if it was released. QCS sued the agency in July 2017. In September 2018, a federal judge rejected most of NHTSA's arguments, clearing the way for NHTSA to release the information to QCS late last year.
