From an AT&T advertisement that ran in Scientific American, June 19th, 1909.

Even allowing for the inevitable hyperbole of ad copy, this is big talk, but it is highly indicative of its era. There was a widespread, perhaps even dominant belief among turn-of-the-century Americans that the generation of unprecedented technological progress they had just witnessed marked the beginning of a new age of civilization and perhaps even human evolution.

This was about the time that the idea of an unimaginable future coming at us with ever-increasing speed first took hold in the public consciousness. It was also perhaps the last time events justified the notion.

Comments, observations and thoughts from two bloggers on applied statistics, higher education and epidemiology. Joseph is an associate professor. Mark is a professional statistician and former math teacher.

Wednesday, February 7, 2018

Tuesday, February 6, 2018

"It’s not just the Second Avenue Subway"

Over at Citylab, Alon Levy has a great piece on the costs of passenger rail.

The approximate range of underground rail construction costs in continental Europe and Japan is between $100 million per mile, at the lowest end, and $1 billion at the highest. Most subway lines cluster in the range of $200 million to $500 million per mile; in Amsterdam, a six-mile subway line cost 3.1 billion Euros, or about $4 billion, after severe cost overruns, delays, and damage to nearby buildings. The Second Avenue Subway is unique even in the U.S. for its exceptionally high cost, but elsewhere, the picture is grim by European standards:

It is hard to find exact international comparisons for subway lines running in the medians of freeways. However, it should not be much more difficult to construct such lines than to build light rail. Indeed, in the study for Atlanta’s I-20 East extension, there are options for light rail and bus rapid transit, and the light rail option is only slightly cheaper than the heavy rail option, at $140 million per mile.

| Line | Type | Cost | Length | Cost/mile |
|---|---|---|---|---|
| San Francisco Central Subway | Underground | $1.57 billion | 1.7 miles | $920 million |
| Los Angeles Regional Connector | Underground | $1.75 billion | 1.9 miles | $920 million |
| Los Angeles Purple Line Phases 1-2 | Underground | $5.2 billion | 6.5 miles | $800 million |
| BART to San Jose (proposed) | 83% Underground | $4.7 billion | 6 miles | $780 million |
| Seattle U-Link | Underground | $1.8 billion | 3 miles | $600 million |
| Honolulu Area Rapid Transit | Elevated | $10 billion | 20 miles | $500 million |
| Boston Green Line Extension | Trench | $2.3 billion | 4.7 miles | $490 million |
| Washington Metro Silver Line Phase 2 | Freeway median | $2.8 billion | 11.5 miles | $240 million |
| Atlanta I-20 East Heavy Rail | Freeway median | $3.2 billion | 19.2 miles | $170 million |

Lists of light-rail lines built in France in recent years can be found on French Wikipedia and Yonah Freemark’s The Transport Politic, and in articles in French media. The cheaper lines cost about $40 million per mile, the more expensive ones about $100 million.

In the United States, most recent and in-progress light-rail lines cost more than $100 million per mile. Two light-rail extensions in Minneapolis, the Blue Line Extension and the Southwest LRT, cost $120 million and $130 million per mile, respectively. Dallas’ Orange Line light rail, 14 miles long, cost somewhere between $1.3 billion and $1.8 billion. Portland’s Orange Line cost about $200 million per mile. Houston’s Green and Purple Lines together cost $1.3 billion for about 10 miles of light rail.
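For readers who want to check the arithmetic, here is a minimal back-of-the-envelope sketch. It recomputes the cost-per-mile column of the table from the cost and length columns, then does the same division for the Dallas and Houston lines, which are quoted above only as totals. All the figures are copied from the excerpt; the helper name `per_mile_millions` and the dictionary layout are just illustrative choices, and Houston's "about 10 miles" is treated as exactly 10.

```python
# Figures copied from the quoted table:
# (cost in $ billions, length in miles, published cost per mile in $ millions).
TABLE = {
    "San Francisco Central Subway": (1.57, 1.7, 920),
    "Los Angeles Regional Connector": (1.75, 1.9, 920),
    "Los Angeles Purple Line Phases 1-2": (5.2, 6.5, 800),
    "BART to San Jose (proposed)": (4.7, 6.0, 780),
    "Seattle U-Link": (1.8, 3.0, 600),
    "Honolulu Area Rapid Transit": (10.0, 20.0, 500),
    "Boston Green Line Extension": (2.3, 4.7, 490),
    "Washington Metro Silver Line Phase 2": (2.8, 11.5, 240),
    "Atlanta I-20 East Heavy Rail": (3.2, 19.2, 170),
}


def per_mile_millions(cost_billions, miles):
    """Cost per mile in millions of dollars (hypothetical helper)."""
    return cost_billions * 1000 / miles


# Largest gap between a recomputed value and the published one.
max_gap = max(
    abs(per_mile_millions(cost, miles) - published)
    for cost, miles, published in TABLE.values()
)

# Light-rail totals that the excerpt quotes without a per-mile figure.
# Houston's length is "about 10 miles" in the text; 10 is an assumption.
dallas_low = per_mile_millions(1.3, 14)
dallas_high = per_mile_millions(1.8, 14)
houston = per_mile_millions(1.3, 10)

print(f"Largest table discrepancy: ${max_gap:.1f} million per mile")
print(f"Dallas Orange Line: ${dallas_low:.0f}-{dallas_high:.0f} million per mile")
print(f"Houston Green and Purple Lines: ${houston:.0f} million per mile")
```

On these inputs, every published per-mile figure is within $5 million of cost divided by length, which is what you would expect from rounding to the nearest $10 million. Dallas works out to roughly $93 million to $129 million per mile and Houston to about $130 million.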

Monday, February 5, 2018

This is almost beyond parody

This is Joseph

From Duncan Black, we have this article:

And today, a coalition of companies—including Lyft, Uber, and Zipcar—officially announced that they were signing on to a 10-point set of “shared mobility principles for livable cities”—in other words, industry goals for making city transit infrastructure as pleasant, equitable, and clean as possible. Some of the 10 statements, like “we support people over vehicles,” are vague but positive. But the final one speaks loudly about what city roads of the future could look like: “that autonomous vehicles (AVs) in dense urban areas should be operated only in shared fleets.”

It’s a “very convenient” idea for the companies who are promoting it, says Don MacKenzie, an assistant professor of civil and environmental engineering at the University of Washington. “It is basically [tying] the success of these companies to the adoption of autonomous vehicles,” he says. In other words, putting this principle in action means that “if people want the benefits of AVs, they can only get that by using shared fleets.” (Worth noting that the concept only applies to dense urban areas.)

OK, the first thing here is that any possible argument for this approach for AVs is going to apply to public transit as well. Bus service would be greatly improved if many or most cars were banned or restricted from operation on city streets.

Second, there is a huge implicit road subsidy here for these companies, presuming non-AVs would need to be banned as well: roads that appear to be supported by public taxation but can only be used by corporations (not individuals).

Three, doesn't this create perverse incentives to make public transportation less effective, via lobbying for weaker bus service, especially if AVs become more standard?

Four, isn't regulation intended to give private benefit to a public good a problem? Allowing an AV to only be operated by a collective seems to be an odd regulation. In what other area do we restrict items to use by corporations but ban private use? There must be some but . . .

Five, doesn't this likely end up with more non-AVs on the road, making the AVs less safe because they can't communicate with non-AVs?

It seems like a very self-serving idea for ride-sharing fleets to promote. I am curious what benefits we have here that wouldn't apply to public transit?

Friday, February 2, 2018

I think Uber might be using the hover-plane design for its proposed flying taxis.

As we mentioned before, fairly early in the 20th century, popular culture seems to have settled on the period roughly around 1890 as the end of the simple, leisurely paced good old days, just before technological revolution sent the country hurtling into the future.

Just Imagine is a good example if not a good film (to be perfectly honest, I've never made it all the way through without skipping sizable chunks). The period of comparison is pushed back to 1880 – – probably in order to have a nice round hundred years before the main action set in 1980 – – but the rest of the formula is there.

El (short for Elmer) Brendel was a Swedish dialect comedian who was popular at the time (though not popular enough for either of his starring vehicles to turn a profit). These days he is remembered almost solely for this film, which itself is remembered mainly for providing stock footage for Saturday morning serials like Flash Gordon and Buck Rogers.

It's worth checking out the first few minutes just for the art direction and then fast-forwarding to around the 22-minute mark, where Henry Ford's anti-Semitism provides the subject for a then-topical joke.

Thursday, February 1, 2018

Media Post: Game of Thrones and succession

This is Joseph

** SPOILERS**

As some readers may know, the TV show Game of Thrones has bypassed the book series A Song of Ice and Fire by George R. R. Martin. Along the way, the writers also appear to have merged a number of characters together to reduce complexity. In the process, however, they have undermined a key piece of the entire story.

The plot that starts the whole series off is the discovery that the children of Cersei Lannister are not the real children of the king, Robert Baratheon. As a result, they lack a blood claim to the Iron Throne. In parallel, we have the story of Daenerys Targaryen, who is seeking to reclaim the Iron Throne because she is a relative of the deposed king. We also have a history of a rebellion where the king was overthrown and replaced by a relative (said Robert) who had a close blood claim on the throne. Finally, there is a hidden twist where the bastard son of Ned Stark, Jon Snow, is actually the legitimate son of the Targaryen prince and has a stronger claim to the throne than Daenerys.

So the whole plot is driven by blood claims and how they influence characters' succession rights. It is also a medieval world and not a modern one -- the king needs the support of the nobles in order to raise armies and funds. These nobles, themselves, depend on succession for legitimacy.

In the last season there were some questionable succession moves. But for some of them I can justify how the claim comes into being. Cersei Lannister is a close relative (mother) of the last king and happens to be in charge of the regency at the time. It's not typical, but it happened with Catherine the Great (for example) where a marriage/parentage claim allowed an unexpected monarch. Same with the Queen of Thorns -- she is at least a close relative of the last head of house Tyrell. An odd succession choice but you can see how she could have been a compromise candidate.

But what is going on with Ellaria Sand? She leads a coup to kill the members of the ruling family of Dorne. She is the illegitimate girlfriend of the brother of the previous ruler. How does she end up in charge? Now I could imagine a sand snake in charge -- they are also illegitimate but are at least the daughters of Oberyn Martell. But Ellaria?

Ellaria then joins forces with Daenerys, whose only legitimate claim on the iron throne rests on blood succession. If you take that away then you have a foreign invader pursuing a grudge against the family of people who wronged her (it is obvious that Cersei, herself, had nothing to do with Robert's Rebellion and the major rebels are all dead).

Why would Daenerys undermine the major point in her favor (succession law) by allying with somebody who has no succession rights to her current position (inside her kingdom) and, at the very least, was suspiciously involved with the violent death of the previous ruler and heir? I mean both were cut down along with their bodyguard -- nobody is dumb enough to think that this was an accident.

What I think happened was that they merged Arianne Martell with Ellaria. If Arianne did this plot then that would be different. Without clear evidence that she was the murderer, she would have the best claim to the throne (being the current heir). Ambiguity might preserve her position (think of Edward II and Edward III) and objectors would have to act fast to gather evidence. Nobles wouldn't see their succession rights being tainted.

But this adaptation definitely requires some more thought and should have been staged better. Why not put Obara Sand in charge, as the closest blood relative to the now-extinct House of Martell? It might not be extinct in the books, but at least in the show that would be an easy claim to make as they killed every Martell we met. As it is, without additional information, the succession of Ellaria Sand to the leadership of Dorne undermines the main force driving the political plot and reduces the coherence of the story.

This was not one of the changes that improved the show.

Wednesday, January 31, 2018

Alon vs. Elon

Alon Levy is a highly respected transportation blogger currently based in Paris. He's also perhaps the leading debunker of the Hyperloop (or more accurately, the “Hyperloop,” but that's a topic we've probably exhausted). When Brad Plumer, writing in the Washington Post, declared ‘There is no redeeming feature of the Hyperloop,’ the headline was a quote from Levy.

Recently, Levy took a close look at Musk's Boring Company and found the name remarkably apt. We'd previously expressed skepticism about Musk's claim, but it turns out we were being way too kind.

Levy's takedown is detailed but readable and I highly recommend going through it if you have any interest in what drives infrastructure costs. Rather than try to excerpt some of the more technical passages, I thought I'd stick with the following general but still sharp observation from the post.

Americans hate being behind. The form of right-wing populism that succeeded in the United States made that explicit: Make America Great Again. Culturally, this exists outside populism as well, for example in Gordon Gekko’s greed is good speech, which begins, “America has become a second-rate power.” In the late 2000s, Americans interested in transportation had to embarrassingly admit that public transit was better in Europe and East Asia, especially in its sexiest form, the high-speed trains. Musk came in and offered something Americans craved: an American way to do better, without having to learn anything about what the Europeans and Asians do. Musk himself is from South Africa, but Americans have always been more tolerant of long-settled immigrants than of foreigners.

In the era of Trump, this kind of nationalism is often characterized as the domain of the uneducated: Trump did the best among non-college-educated whites, and cut into Democratic margins with low-income whites (regardless of education). But software engineers making $120,000 a year in San Francisco or Boston are no less nationalistic – their nationalism just takes a less vulgar form. Among the tech workers themselves, technical discussions are possible; some close-mindedly respond to every criticism with “they also laughed at SpaceX,” others try to engage (e.g. Hyperloop One). But in the tech press, the response is uniformly sycophantic: Musk is a genius, offering salvation to the monolingual American, steeped in the cultural idea of the outside inventor who doesn’t need to know anything about existing technology and can substitute personal intelligence and bravery.

In reality, The Boring Company offers nothing of this sort. It is in the awkward position of being both wrong and unoriginal: unoriginal because its mission of reducing construction costs from American levels has already been achieved, and wrong because its own ideas of how to do so range from trivial to counterproductive. It has good marketing, buoyed by the tech world’s desire to believe that its internal methods and culture can solve every problem, but it has no product to speak of. What it’s selling is not just wrong, but boringly so, without any potential for salvaging its ideas for something more useful.

Tuesday, January 30, 2018

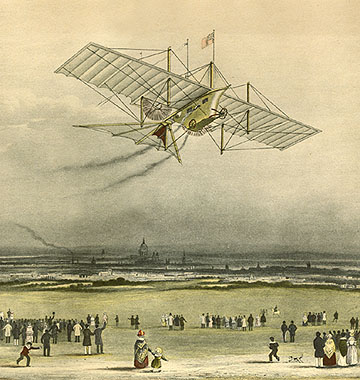

Steam-powered airplanes

I'm going to connect this up with some ongoing thread somewhere down the line, but for now I just thought I'd share this really cool list from Wikipedia of 19th-century experiments in steam-powered aircraft.

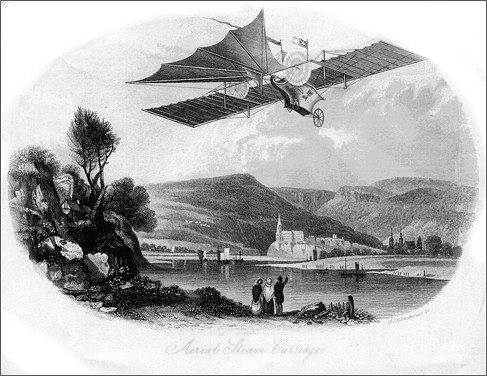

The Aerial Steam Carriage

The Henson Aerial Steam Carriage of 1843 (imaginary representation for an advertisement).

Patent drawing for the Henson Aerial Steam Carriage of 1843.

Ader Avion III

- 1842: The Aerial Steam Carriage of William Samuel Henson and John Stringfellow was patented, but was never successful, although a steam-powered model was flown in 1848.

- 1852: Henri Giffard flew a 3-horsepower (2 kW) steam-powered dirigible over Paris; it was the first powered aircraft.

- 1861: Gustave Ponton d'Amécourt made a small steam-powered craft, coining the name helicopter.

- 1874: Félix du Temple flew a steam-powered aluminium monoplane off a downhill run. While it did not achieve level flight, it was the first manned heavier-than-air powered flight.

- 1877: Enrico Forlanini built and flew a model steam-powered helicopter in Milan.

- 1882: Alexander Mozhaisky built a steam-powered plane but it did not achieve sustained flight. The engine from the plane is in the Central Air Force Museum in Monino, Moscow.

- 1890: Clément Ader built a steam-powered, bat-winged monoplane, named the Eole. Ader flew it on October 9, 1890, over a distance of 50 metres (160 ft), but the engine was inadequate for sustained and controlled flight. His flight did prove that a heavier-than-air flight was possible. Ader made at least three further attempts, the last two on 12 and 14 October 1897 for the French Ministry of War. There is controversy about whether or not he attained controlled flight. Ader did not obtain funding for his project, and that points to its probable failure.[1]

- 1894: Sir Hiram Stevens Maxim (inventor of the Maxim Gun) built and tested a large rail-mounted, steam-powered aircraft testbed, with a mass of 3.5 long tons (3.6 t) and a wingspan of 110 feet (34 m) in order to measure the lift produced by different wing configurations. The machine unexpectedly generated sufficient lift and thrust to break free of the test track and fly, but was never intended to be operated as a piloted aircraft and so crashed almost immediately owing to its lack of flight controls.

- 1896: Samuel Pierpont Langley successfully flew unpiloted steam-powered models.[2]

- 1897: Carl Richard Nyberg's Flugan developed steam-powered aircraft over a period from 1897 to 1922, but they never achieved more than a few short hops.

Monday, January 29, 2018

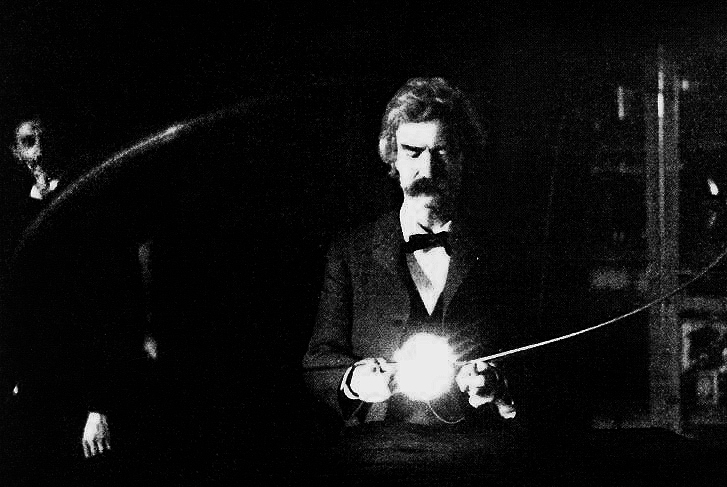

Tesla and the New York Times – – adding a historical component to the hype-and-bullshit tech narrative

This New York Times piece ["Tesla the Car Is a Household Name. Long Ago, So Was Nikola Tesla".

By John F. Wasik DEC. 30, 2017 ] is so bad it might actually be more useful than a better written article (such as this Smithsonian profile) would have been. Wasik provides us with an excellent example of the way writers distort and mythologize the history of technology in the service of cherished conventional narratives.

The standard tech narrative is one of great men leading us into a period of extraordinary, unprecedented progress, sometimes exciting, sometimes frightening, but always unimaginably big and just around the corner. In order to maintain this narrative, the magnitude and imminence of the recent advances is consistently overstated while the technological accomplishments and sophistication of the past is systematically understated. Credit for those advances that did happen is assigned to a handful of visionaries, many of the tragic, ahead-of-their-time variety.

These historical retcons tend to collapse quickly when held up against actual history. This is especially true here. Tesla always generated a great deal of sensationalistic coverage (more often than not intentionally), but more sober contemporary sources consistently viewed him as both a brilliant and important innovator and also an often flaky and grandiose figure, a characterization that holds up well to this day. This was the man who invented the induction motor; he was also the guy who claimed to hear interplanetary radio messages in his lab.

John F. Wasik plays the ahead-of-their-time card extensively throughout the article.

The trouble with this part of the narrative is that Tesla's Great White Whale (long-distance wireless power transmission) was simply a bad idea. It was not financially feasible because it was not feasible period. We've had over a century to consider the problem, not to mention more powerful tools and a far greater understanding of the underlying physical forces, and we can say with near absolute confidence that, even if long-distance wireless power transmission is developed in the future, it will not use Tesla's approach. No amount of funding, no degree of public support would have changed the outcome of this part of the story.

Just to be clear, even bad ideas can require brilliant engineering. That was certainly the case here, just as it was with other dead ends of the era such as mechanical televisions and steam powered aircraft. Even to this day, demonstrations of Tesla's system are impressive to watch.

Just as the if-only-he'd-had-the-money argument collapses under scrutiny, so does the lone-visionary-ahead-of-his-time narrative. If you dig through contemporary accounts, you'll find that Tesla was very much representative of the scientific and research community of his time in most ways. Both in terms of the work he was doing and the ideas he was formulating about the role of technology, he had lots of company.

Wasik consistently downplayed the work of Tesla's contemporaries, sometimes subtly (saying that the Supreme Court ruled in his favor in a 1943 patent dispute when in fact, he was one of three inventors who had their prior patents restored by the decision), sometimes in a comically over-the-top fashion as with this description of Tesla's remote-controlled torpedo.

Just to be clear, Tesla was doing important and impressive work, but as one of a number of researchers pushing the boundaries of the field of radio.

From Wikipedia:

The part about proposing the "development of torpedoes well before World War I" is even stranger. The self-propelled torpedo had been in service for more than 30 years by 1898, and remote-controlled torpedoes (using a mechanical system based on trailing cables, but still having considerable range and speed) had been in use for over 20.

It makes for a good story to credit Tesla as the lone visionary who came up with robotics and remote control by himself but was just too far ahead of his time to sell the world on that vision, but the truth is that lots of smart people were working along these lines (like Leonardo Torres y Quevedo) and pretty much everybody saw the potential value.

Tesla's ideas about remote-controlled torpedoes would take years to be implemented because it would take years for the technology to catch up with his rhetoric. You can read a very good contemporary account from scientific American that spelled out the issues.

We've talked a lot here at the blog about about the mythologizing and bullshit that are pervasive in the 21st century technology narrative. It's worth noting that those same popular but dangerously false narratives color our perception of the past as well.

By John F. Wasik DEC. 30, 2017 ] is so bad it might actually be more useful than a better written article (such as this Smithsonian profile) would have been. Wasik provides us with an excellent example of the way writers distort and mythologize the history of technology in the service of cherished conventional narratives.

The standard tech narrative is one of great men leading us into a period of extraordinary, unprecedented progress, sometimes exciting, sometimes frightening, but always unimaginably big and just around the corner. In order to maintain this narrative, the magnitude and imminence of the recent advances is consistently overstated while the technological accomplishments and sophistication of the past is systematically understated. Credit for those advances that did happen is assigned to a handful of visionaries, many of the tragic, ahead-of-their-time variety.

These historical retcons tend to collapse quickly when held up against actual history. This is especially true here. Tesla always generated a great deal of sensationalistic coverage (more often than not intentionally), but more sober contemporary sources consistently viewed him as both a brilliant and important innovator and also an often flaky and grandiose figure, a characterization that holds up well to this day. This was the man who invented the induction motor; he was also the guy who claimed to hear interplanetary radio messages in his lab.

John F. Wasik plays the ahead-of-their-time card extensively throughout the article.

He envisioned a system that could transmit not only radio but also electricity across the globe. After successful experiments in Colorado Springs in 1899, Tesla began building what he called a global “World System” near Shoreham on Long Island, hoping to power vehicles, boats and aircraft wirelessly. Ultimately, he expected that anything that needed electricity would get it from the air much as we receive transmitted data, sound and images on smartphones. But he ran out of money, and J. P. Morgan Jr., who had provided financing, turned off the spigot.

…

Tesla’s ambitions outstripped his financing. He didn’t focus on radio as a stand-alone technology. Instead, he conceived of entire systems, even if they were decades ahead of the time and not financially feasible.

Tesla failed to fully collaborate with well-capitalized industrial entities after World War I. His supreme abilities to conceptualize and create entire systems weren’t enough for business success. He didn’t manage to build successful alliances with those who could finance, build and scale up his creations.

Tesla’s achievements were awesome but incomplete. He created the A.C. energy system and the basics of radio communication and robotics but wasn’t able to bring them all to fruition. His life shows that even for a brilliant inventor, innovation doesn’t happen in a vacuum. It requires a broad spectrum of talents and skills. And lots of capital.

The trouble with this part of the narrative is that Tesla's Great White Whale (long-distance wireless power transmission) was simply a bad idea. It was not financially feasible because it was not feasible, period. We've had over a century to consider the problem, not to mention more powerful tools and a far greater understanding of the underlying physical forces, and we can say with near absolute confidence that, even if long-distance wireless power transmission is developed in the future, it will not use Tesla's approach. No amount of funding, no degree of public support would have changed the outcome of this part of the story.

Just to be clear, even bad ideas can require brilliant engineering. That was certainly the case here, just as it was with other dead ends of the era such as mechanical televisions and steam-powered aircraft. Even to this day, demonstrations of Tesla's system are impressive to watch.

[Tesla understood the value of celebrity.]

Just as the if-only-he'd-had-the-money argument collapses under scrutiny, so does the lone-visionary-ahead-of-his-time narrative. If you dig through contemporary accounts, you'll find that Tesla was very much representative of the scientific and research community of his time in most ways. Both in terms of the work he was doing and the ideas he was formulating about the role of technology, he had lots of company.

Wasik consistently downplayed the work of Tesla's contemporaries, sometimes subtly (saying that the Supreme Court ruled in his favor in a 1943 patent dispute when in fact, he was one of three inventors who had their prior patents restored by the decision), sometimes in a comically over-the-top fashion as with this description of Tesla's remote-controlled torpedo.

Shortly after filing a patent application in 1897 for radio circuitry, Tesla built and demonstrated a wireless, robotic boat at the old Madison Square Garden in 1898 and, again, in Chicago at the Auditorium Theater the next year. These were the first public demonstrations of a remote-controlled drone.

An innovation in the boat’s circuitry — his “logic gate” — became an essential steppingstone to semiconductors. [This is a somewhat controversial claim. We'll try to come back to this later – MP]

Tesla’s tub-shaped, radio-controlled craft heralded the birth of what he called a “teleautomaton”; later, the world would settle on the word robot. We can see his influence in devices ranging from “smart” speakers like Amazon’s Echo to missile-firing drone aircraft.

Tesla proposed the development of torpedoes well before World War I. These weapons eventually emerged in another form — launched from submarines.

Just to be clear, Tesla was doing important and impressive work, but as one of a number of researchers pushing the boundaries of the field of radio.

From Wikipedia:

In 1894, the first example of wirelessly controlling at a distance was during a demonstration by the British physicist Oliver Lodge, in which he made use of a Branly's coherer to make a mirror galvanometer move a beam of light when an electromagnetic wave was artificially generated. This was further refined by radio innovators Guglielmo Marconi and William Preece, at a demonstration that took place on December 12, 1896, at Toynbee Hall in London, in which they made a bell ring by pushing a button in a box that was not connected by any wires. In 1898 Nikola Tesla filed his patent, U.S. Patent 613,809, named Method of an Apparatus for Controlling Mechanism of Moving Vehicle or Vehicles, which he publicly demonstrated by radio-controlling a boat during an electrical exhibition at Madison Square Garden. Tesla called his boat a "teleautomaton"

The part about proposing the "development of torpedoes well before World War I" is even stranger. The self-propelled torpedo had been in service for more than 30 years by 1898, and remote-controlled torpedoes (using a mechanical system based on trailing cables, but still having considerable range and speed) had been in use for over 20.

It makes for a good story to credit Tesla as the lone visionary who came up with robotics and remote control by himself but was just too far ahead of his time to sell the world on that vision, but the truth is that lots of smart people were working along these lines (like Leonardo Torres y Quevedo) and pretty much everybody saw the potential value.

Tesla's ideas about remote-controlled torpedoes would take years to be implemented because it would take years for the technology to catch up with his rhetoric. You can read a very good contemporary account from Scientific American that spelled out the issues.

We've talked a lot here at the blog about the mythologizing and bullshit that are pervasive in the 21st-century technology narrative. It's worth noting that those same popular but dangerously false narratives color our perception of the past as well.

Friday, January 26, 2018

Add Adam Conover to the list of replication bullies

[Spelling error corrected.]

Most people in the news media missed the joke when Jon Stewart took over the Daily Show, or, more accurately, saw a joke that wasn't there. It took them a while to realize that while Stewart, the cast, and the writers of the show were trying to be funny, they generally weren't kidding.

The Daily Show was in that sense a very serious response to the disastrous state of turn-of-the-millennium journalism. As bad as things are now, it is easy to forget how much worse they were in the period roughly defined by Whitewater, Bush v. Gore, and the build-up to the Iraq war. The best of the new voices, such as Josh Marshall, were just beginning to be heard. The New York Times was pretty much the same as it is today, but now its devotion to practices like false balance, blind adherence to conventional narratives, and Clinton derangement syndrome has grown increasingly controversial with the rest of the media, with papers like the Washington Post aggressively pushing back. In 1999, the rest of the press corps was seeing who could be the most like the NYT rather than criticizing it.

When the true nature of Stewart's Daily Show finally began to dawn on people, there was considerable bad feeling among journalists, lots of grumbling that Stewart, Colbert, and the rest had forgotten their place. Colbert's performance at the White House Correspondents' Dinner in particular hit a huge nerve. The people in that ballroom were perhaps the last to realize that the satirical bits were driven by genuine contempt for a profession that had gone to hell.

Stewart and Colbert focused on press criticism because it was a ripe target for satire but also because it was something that needed to be done (God knows hacks like David Carr and Jack Shafer were not up to the job). The next generation (John Oliver, Samantha Bee, and, in his new role, Stephen Colbert) has shifted more toward news presented with a satirical bent, once again filling in gaps left by conventional journalism.

Go forward another iteration (and another literal generation) and you get Cracked and College Humor, which have taken those same basic instincts and applied them to less topical subjects, often focusing more on education than news.

One common thread that runs through all of the shows over the past almost 20 years is a reaction against the polite toleration of bullshit that has come to dominate mainstream journalism. It is worth noting that Adam Conover squarely placed himself in the pro-replication camp and has made citing sources and acknowledging errors a prominent part of Adam Ruins Everything.

Thursday, January 25, 2018

A Turn of the Century Childhood's End

This may go against conventional wisdom, but what we now call New Age beliefs (normally seen as children of the counter-culture) are largely a product of the late 19th and early 20th centuries. Parapsychology? Check. Ghosts and similar entities? Check. Fascination with paganism and arcane religions? Check. Space aliens? Check. And finally, the idea that humanity is on the verge of a huge evolutionary leap, a leap that might already be happening? Check.

The turn of the century probably also marked the peak respectability for these beliefs. It's difficult to imagine something like this running in Scientific American in the 21st century.

Wednesday, January 24, 2018

Where quack medicine meets political corruption meets pyramid schemes

This piece from the LA Times' indispensable Michael Hiltzik this excellent New Yorker article by Rachel Monroe

Orrin Hatch is leaving the Senate, but his deadliest law will live on – LA Times

Sen. Orrin Hatch (R-Utah) last made a public splash during the debate over the GOP's tax cut bill in December, when he threw a conniption over the suggestion that the bill would favor the wealthy (who will reap about 80% of its benefits by 2027).

Hatch subsequently announced his retirement from the Senate as of the end of this term, writing finis to his 40 years of service. In that time, he has shown himself to be a master of the down-is-up, wrong-is-right method of obfuscating his favors to rich patrons. That was especially the case with his sedulous defense for 20 years of his deadliest legislative achievement.

We're talking about the Dietary Supplement Health and Education Act of 1994, or DSHEA (pronounced "D-shay"). Hatch introduced DSHEA in collaboration with then-Sen. Tom Harkin (D-Iowa), but there was no doubt that it was chiefly his baby. The act all but eliminated government regulation of the dietary and herbal supplements industry. Henceforth, the Food and Drug Administration could not block a supplement from reaching market; the agency could only take action if it learned of health and safety problems with the product after the fact.

DSHEA, as it was written and as it was intended, facilitates the legal marketing of quackery.

...

The Government Accountability Office found the marketing of herbal supplements, especially to the elderly, to be rife with deceptive and dangerous advice; marketers were heard assuring customers that their products could cure disease and recommending combinations that were medically hazardous. The FDA told the GAO that, yes, those marketers shouldn't be saying these things, and they'd get right on it.

...

But one didn't have to drill down too deeply in the speech to discern what really drove the law's enactment. It wasn't the desire for "rational regulation," but that most common political drug of all, money. The dietary supplement industry had set up shop in Hatch's home state and plied him with pantsfuls of campaign cash; in 2010, for instance, Utah-based Xango LLC, which markets dietary supplements among other products, was Hatch's second-biggest contributor. (Herbalife ranked third.) Hatch's son, Scott, has worked as a lobbyist for the industry.

Thanks to DSHEA, the supplements industry grew from $9 billion in 1994 to more than $50 billion today. In Utah alone, it's worth more than $7 billion.

And the consumers often aren't the only victims.

Tuesday, January 23, 2018

The Grandiosity/Contribution Ratio

From Gizmodo [emphasis added]

Zuck and Priscilla laid out the schematics for this effort on Facebook Live. The plan will be part of the Chan Zuckerberg Initiative and will be called simply “Chan Zuckerberg Science.” The goal, Zuck said, is to “cure, prevent, or manage all diseases in our children’s lifetime.” The project will bring together a bunch of scientists, engineers, doctors, and other experts in an attempt to rid the world of disease.

“We want to dramatically improve every life in [our daughter] Max’s generation and make sure we don’t miss a single soul,” Chan said.

Zuck explained that the Chan Zuckerberg Initiative will work in three ways: bring scientists and engineers together; build tools to “empower” people around the world; and promote a “movement” to fund science globally. The shiny new venture will receive $3 billion in funds over the next decade.

...

“Can we cure prevent or manage all diseases in our children’s lifetime?” Zuck asked at one point. “This is a big goal,” he said soon after, perhaps answering his own question.

Obviously, any time we can get some billionaire to commit hundreds of millions of dollars a year to important basic research, that's a good thing. This money will undoubtedly do a tremendous amount of good and it's difficult to see a major downside.

In terms of the rhetoric, however, it's useful to step back and put this into perspective. In absolute terms $3 billion, even spaced out over a decade, is a great deal of money, but in relative terms is it enough to move us significantly closer to Zuckerberg's "the big goal"? Consider that the annual budget of the NIH alone is around $35 billion. This means that Zuckerberg's initiative is promising to match a little bit less than 1% of NIH funding over the next 10 years.
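For anyone who wants to check the back-of-the-envelope comparison, here is a minimal sketch in Python. It uses only the round numbers quoted above and assumes, purely for illustration, that the NIH budget stays flat at $35 billion for the whole decade; the variable and function names are made up for this example.

```python
# Rough comparison of the pledge with NIH funding.
# All figures are the round numbers quoted in the post, not official data.
PLEDGE_TOTAL = 3e9    # dollars pledged over the next decade
PLEDGE_YEARS = 10     # length of the pledge
NIH_ANNUAL = 35e9     # approximate annual NIH budget (assumed flat)


def share_of_nih(pledge_total, pledge_years, nih_annual):
    """Return the pledge's average annual spending as a fraction of NIH's."""
    annual_pledge = pledge_total / pledge_years
    return annual_pledge / nih_annual


share = share_of_nih(PLEDGE_TOTAL, PLEDGE_YEARS, NIH_ANNUAL)
print(f"Pledge per year: ${PLEDGE_TOTAL / PLEDGE_YEARS / 1e6:.0f} million")
print(f"Share of NIH funding: {share:.2%}")
```

Under those assumptions the pledge works out to about $300 million a year, which is where the "a little bit less than 1%" figure comes from.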

From a research perspective, this is still a wonderful thing, but from a sociological perspective, it's yet another example of the hype-driven culture of Silicon Valley and what I've been calling the magical heuristics associated with it. Two of the heuristics we've mentioned before were the magic of language and the magic of will. When a billionaire, particularly a tech billionaire, says something obviously, even absurdly exaggerated, the statement is often given more rather than less weight. The unbelievable claims are treated less as descriptions of the world as it is and more incantations to help the billionaires will a new world into existence.

Perhaps the most interesting part of Zuckerberg's language here is that it reminds us just how much the Titans of the Valley have bought into their own bullshit.

Monday, January 22, 2018

Arthur C Clarke and the futurist's inflection point

Clarke circa 1964:

Trying to predict the future is a discouraging and hazardous occupation because the prophet invariably falls between two stools. If his predictions sound at all reasonable, you can be quite sure that within 20 or, at most, 50 years, the progress of science and technology has made him seem ridiculously conservative. On the other hand, if by some miracle a prophet could describe the future exactly as it was going to take place, his predictions would sound so absurd, so far-fetched, that everybody would laugh him to scorn. This has proved to be true in the past, and it will inevitably be true, even more so, of the century to come.

The only thing we can be sure of about the future is that it will be absolutely fantastic.

So, if what I say to you now seems to be very reasonable, then I'll have failed completely. Only if what I tell you appears absolutely unbelievable, have we any chance of visualizing the future as it really will happen.

I can't quite recommend Paul Collins' recent New Yorker piece about the book Toward the Year 2018. Collins doesn't bring a lot of fresh insight to the subject (if you want a deeper understanding of how people in the past looked at what was formerly the future, stick with Gizmodo's Paleofuture), but it did turn me on to what appears to be a fascinating book (I'll let you know in a few days) which provides a great jumping off point for a discussion I've been meaning to have for a while.

If you wanted to hear the future in late May, 1968, you might have gone to Abbey Road to hear the Beatles record a new song of John Lennon’s—something called “Revolution.” Or you could have gone to the decidedly less fab midtown Hilton in Manhattan, where a thousand “leaders and future leaders,” ranging from the economist John Kenneth Galbraith to the peace activist Arthur Waskow, were invited to a conference by the Foreign Policy Association. For its fiftieth anniversary, the F.P.A. scheduled a three-day gathering of experts, asking them to gaze fifty years ahead. An accompanying book shared the conference’s far-off title: “Toward the Year 2018.”

…

“MORE AMAZING THAN SCIENCE FICTION,” proclaims the cover, with jacket copy envisioning how “on a summer day in the year 2018, the three-dimensional television screen in your living room” flashes news of “anti-gravity belts,” “a man-made hurricane, launched at an enemy fleet, [that] devastates a neutral country,” and a “citizen’s pocket computer” that averts an air crash. “Will our children in 2018 still be wrestling,” it asks, “with racial problems, economic depressions, other Vietnams?”

Much of “Toward the Year 2018” might as well be science fiction today. With fourteen contributors, ranging from the weapons theorist Herman Kahn to the I.B.M. automation director Charles DeCarlo, penning essays on everything from “Space” to “Behavioral Technologies,” it’s not hard to find wild misses. The Stanford wonk Charles Scarlott predicts, exactly incorrectly, that nuclear breeder reactors will move to the fore of U.S. energy production while natural gas fades. (He concedes that natural gas might make a comeback—through atom-bomb-powered fracking.) The M.I.T. professor Ithiel de Sola Pool foresees an era of outright control of economies by nations—“They will select their levels of employment, of industrialization, of increase in GNP”—and then, for good measure, predicts “a massive loosening of inhibitions on all human impulses save that toward violence.” From the influential meteorologist Thomas F. Malone, we get the intriguing forecast of “the suppression of lightning”—most likely, he figures, “by the late 1980s.”

But for every amusingly wrong prediction, there’s one unnervingly close to the mark. It’s the same Thomas Malone who, amid predictions of weaponized hurricanes, wonders aloud whether “large-scale climate modification will be effected inadvertently” from rising levels of carbon dioxide. Such global warming, he predicts, might require the creation of an international climate body with “policing powers”—an undertaking, he adds, heartbreakingly, that should be “as nonpolitical as possible.” Gordon F. MacDonald, a fellow early advocate on climate change, writes a chapter on space that largely shrugs at manned interplanetary travel—a near-heresy in 1968—by cannily observing that while the Apollo missions would soon exhaust their political usefulness, weather and communications satellites would not. “A global communication system . . . would permit the use of giant computer complexes,” he adds, noting the revolutionary potential of a data bank that “could be queried at any time.”

[Though it's a bit off-topic, I have to take a moment to push back against the "near-heresy" comment. Most people probably assumed manned space exploration would have more of a future after '68, and it certainly would've gone farther had LBJ run for and won a second term (Johnson had been space exploration's biggest champion dating back to his days in the Senate), but the program had always been controversial. "Can't we find better ways to spend that money here on earth?" was a common refrain from both the left and the right.]

As you go through the predictions listed here, you'll notice that they range from the reasonably accurate to the wildly overoptimistic or perhaps overly pessimistic, depending on your feelings toward weaponized hurricanes (let's just go with ambitious). This matches up fairly closely with what you find in Arthur C Clarke's video essay of a few years earlier, with parts that seem prescient while others come off as something from that month's issue of Galaxy Magazine.

It's important to step back and remember that it didn't use to be like that. If you had gone back 20, 50, or one hundred years and asked experts to predict what was coming and how soon we would get there, you almost certainly would have gotten many answers that seriously underestimated upcoming technological developments. If anything, the overly conservative would probably have outweighed the overly ambitious.

The 60s seemed to be the point when our expectations started exceeding our future. I have some theories as to why Clarke's advice for prognostication stopped working, but they'll have to wait till another post.

Friday, January 19, 2018

Removing the senate

This is Joseph

I normally have great respect for Ezra Klein. His stuff is awesome and I always click on his articles. Which is why this article annoyed me.

Consider the proposal:

Bennet has introduced the “Shutdown Accountability Resolution.” The effect would be that from the moment a shutdown starts, most members of the Senate would be forced to remain in the Senate chambers from 8 am to midnight, all day, every day. No weekends. No fundraisers. No trips home to see their families or constituents.

The proposal would not, itself, resolve the DREAMer debate that’s driving the federal government toward shutdown. But it would give the senators involved a powerful incentive to find a solution. This is a body that typically comes together in Washington a few days a week for only part of the year. The last thing they want is to be tied to the Senate floor day after day, for weeks or months on end.

Here’s how the resolution works: It would change Senate rules so that following a lapse in funding for one or more federal agencies — the technical meaning of a shutdown — the Senate must convene at 8 am the next day. Upon convening, the presiding officer forces a quorum call to see who’s present.

In the absence of a quorum, the Senate moves to a roll call vote demanding the attendance of absent senators. If a sufficient number are absent, the sergeant at arms will be asked to arrest them. This process is repeated every hour between 8 am and midnight until a bill passes reopening the government.

The result is that senators need to remain on or near the Senate floor for the duration of the shutdown. They can’t go wait it out in the comfort of their own home.

Perhaps they omitted the piece where the House of Representatives is also penalized. But a budget needs to be passed by both the House and the Senate, right? So how does this prevent the strategy of the House passing a budget and then leaving for six weeks? They aren't required to be present 8 am to midnight every day. I read the whole thing and it seems awfully specific to senators.

So if the house passes something then the senate can rubber stamp it, or be sitting around until they do. House members can be on the golf course.

After all, if the senators make an agreement on a budget, doesn't it have to pass the house as well?

It also hides the real story, which is that budget reconciliation would let a budget be passed with 50 votes. There was a decision here to put a priority on tax cuts without working out a budget at the same time. The idea that they would need to compromise now was baked into using the previous strategy for a tax cut. But it doesn't help to then make the senate a hostage of the house.

Similarly, what happens if a president vetoes the budget? Punishing senators for other people's actions seems to result in a stable outcome of making the senate impotent. Now this could be the goal, but that seems like a different conversation (should there be a senate).

Feel kind of bad about making fun of Soylent now

Remind me to throw in some raw water jokes next time I write something about the culture of Silicon Valley.
