Here are two beautiful French planes from 1909. Back tomorrow with actual prose.

And a few impressive airships.

Comments, observations and thoughts from two bloggers on applied statistics, higher education and epidemiology. Joseph is an associate professor. Mark is a professional statistician and former math teacher.

Thursday, November 30, 2017

Tuesday, November 28, 2017

Belated Tuesday Tweets

“Newsworthy” implies “demands coverage,” not “deserves platform.”— Mark Palko (@MarkPalko1) November 13, 2017

Under its existing model, Uber's competitive advantage is mainly based on cheap nonunion labor providing its own vehicles. Under this model, Uber has no special advantage while competitors from Amazon to Google to car rental companies to auto manufacturers are better positioned.— Mark Palko (@MarkPalko1) November 13, 2017

Josh missed the most important point. Forget racist, conspiracy theorist and fabulist. Cernovich first came to fame through the men's rights movement. Misogynists have now weaponized the backlash against harassment.— Mark Palko (@MarkPalko1) November 21, 2017

True, but those decisions were made before false balance and Clinton derangement syndrome helped put Trump in the WH. This shows the NYT has learned nothing from its mistakes. @jayrosen_nyu— Mark Palko (@MarkPalko1) November 13, 2017

Of course, if the New York Times had followed the Washington Post's lead instead of doubling down on false balance, we might not have a situation they all need to rise to.— Mark Palko (@MarkPalko1) November 20, 2017

Substitute 50s and Russian for 80s and Japanese and work in Sputnik...https://t.co/JdzsgDyDNX— Mark Palko (@MarkPalko1) November 22, 2017

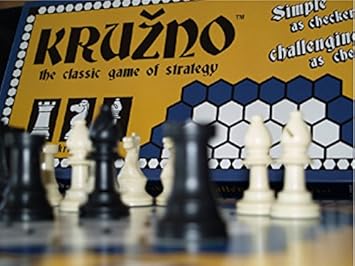

Kruzno

Despite the pieces (I decided to go with something off the shelf for the prototype), it's not a chess variant. Instead, it's a capture-and-evade abstract strategy game where the rank of the pieces is non-transitive. The rules are simple enough for a small child to play but challenging enough to keep reasonably competent chess players on their toes. It is also the official game of a small village in Slovakia, but that's a story for another time.
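"Non-transitive" here means the ranks loop rather than stack: A can beat B and B can beat C while C still beats A. The post doesn't spell out Kruzno's actual rules, so the sketch below uses three made-up pieces (A, B, C) purely to illustrate the idea. It is a small checker, assuming a simple "can capture" relation, that looks for any case where x beats y and y beats z but x does not beat z.

```python
from itertools import permutations

# Hypothetical "beats" relation for three made-up pieces. These are NOT
# Kruzno's actual pieces or rules, just an illustration of non-transitivity.
BEATS = {
    ("A", "B"),  # A can capture B
    ("B", "C"),  # B can capture C
    ("C", "A"),  # C can capture A, closing the loop
}


def beats(x: str, y: str) -> bool:
    """Return True if piece x can capture piece y under BEATS."""
    return (x, y) in BEATS


def is_transitive(pieces: list[str]) -> bool:
    """Transitive means: x beats y and y beats z always imply x beats z."""
    for x, y, z in permutations(pieces, 3):
        if beats(x, y) and beats(y, z) and not beats(x, z):
            return False
    return True


print(is_transitive(["A", "B", "C"]))  # False: A beats B, B beats C, but C beats A
```

In a chess-like ranking every piece has a fixed place in the pecking order; in a cycle like this one, no piece is safe from everything, which is what keeps a capture-and-evade game from collapsing into "protect the strongest piece."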

Between now and Christmas, I'll be writing some posts on game design and possibly sharing some thoughts and cautionary tales about jumping into a small business with no relevant experience. In the meantime, I still have some of that first run up for sale on Amazon. Go by and check it out.

Monday, November 27, 2017

Another Tesla article from the Scientific American archives (along with some very cool train pictures).

19th-century drawing of Geissler tubes.

This story mainly stands on its own, but there are a couple of points I want to emphasize. First, it's a nice example of that distinctive Tesla combination: important innovator; savvy showman; extravagantly over-promising flake. That's not just something that shows in retrospect; you can see it coming through in contemporary accounts. Sober observers were well aware of all of these facets. The man was capable of amazing advances; he also reported getting messages from Mars.

I also like the way the story knocks down a false but persistent trope. There's a tendency to treat earlier generations as innocent and unimaginative, often oblivious to the extraordinary events that were starting to unfold. Call it the "little did they imagine" genre. The trouble is it's almost entirely wrong. Particularly in America, people in the late 19th and early 20th centuries saw themselves as living in a wondrous time, and they tended to greet new developments with great excitement; if anything, they often overestimated the degree to which their world was about to change.

Reading through these old articles, one thing that strikes me is that wildly ambitious ideas were not accepted unskeptically, but they weren't treated dismissively either. People seemed to get that an idea could fail miserably and still be of great value.

Friday, November 24, 2017

This is what a good job market looks like.

In the postwar era, employers (particularly in what we would now call the STEM fields) were genuinely hungry. They were willing to be flexible about qualifications and creative about recruiting.

What's My Line? (1950–1967)

Episode dated 20 February 1955

Thursday, November 23, 2017

"As God is my witness..." is my second favorite Thanksgiving episode line [Repost]

If you watch this and you could swear you remember Johnny and Mr. Carlson discussing Pink Floyd, you're not imagining things. Hulu uses the DVD edit, which cuts out almost all of the copyrighted music.

As for my favorite line, it comes from the Buffy episode "Pangs," and it requires a bit of setup (which is a pain because it makes it next to impossible to work into a conversation).

Buffy's luckless friend Xander had accidentally violated a Native American graveyard and, in addition to freeing a vengeful spirit, had been cursed with all of the diseases Europeans brought to the Americas.

Spike: I just can't take all this mamby-pamby boo-hooing about the bloody Indians.

Willow: Uh, the preferred term is...

Spike: You won. All right? You came in and you killed them and you took their land. That's what conquering nations do. It's what Caesar did, and he's not goin' around saying, "I came, I conquered, I felt really bad about it." The history of the world is not people making friends. You had better weapons, and you massacred them. End of story.

Buffy: Well, I think the Spaniards actually did a lot of - Not that I don't like Spaniards.

Spike: Listen to you. How you gonna fight anyone with that attitude?

Willow: We don't wanna fight anyone.

Buffy: I just wanna have Thanksgiving.

Spike: Heh heh. Yeah... Good luck.

Willow: Well, if we could talk to him...

Spike: You exterminated his race. What could you possibly say that would make him feel better? It's kill or be killed here. Take your bloody pick.

Xander: Maybe it's the syphilis talking, but, some of that made sense.

Wednesday, November 22, 2017

Igon values, superstar coin flippers, and the Gladwell problem

Malcolm Gladwell has started coming up in quite a few major threads and larger pieces, so I decided I needed to get up to speed on some of the controversies involving the author. Some of the more substantial ones have centered on what Steven Pinker has called the Igon Value Problem.

From Pinker's review of "What the Dog Saw"

An eclectic essayist is necessarily a dilettante, which is not in itself a bad thing. But Gladwell frequently holds forth about statistics and psychology, and his lack of technical grounding in these subjects can be jarring. He provides misleading definitions of “homology,” “sagittal plane” and “power law” and quotes an expert speaking about an “igon value” (that’s eigenvalue, a basic concept in linear algebra). In the spirit of Gladwell, who likes to give portentous names to his aperçus, I will call this the Igon Value Problem: when a writer’s education on a topic consists in interviewing an expert, he is apt to offer generalizations that are banal, obtuse or flat wrong.

Gladwell got the best of the follow-up exchange, dismissing “igon value” as a spelling error while getting Pinker sucked into a bunch of secondary or even tertiary arguments. (One of the best indicators of intelligence is the ability to avoid discussions about the heritability of intelligence.)

The spelling error defense is technically correct but it misrepresents the main point of the criticism. First off, on a really basic level, this indicates poor fact checking on the part of Mr. Gladwell and the New Yorker. Even working under the relatively low standards of the blogosphere, I always try to Google unfamiliar phrases before quoting them. You'd think that the editors of America's most distinguished magazine would do at least that much.

More importantly, spelling errors fall into two basic categories. The first does not tell us anything substantive about the writer. Given the ghoti insanity of the English language, being a bad speller does not necessarily imply a weak vocabulary or poor mastery of the language (put another way, not knowing whether it's double C or double S in "necessarily" does not necessarily suggest that you don't know what "necessarily" means). There are, however, cases (particularly involving transcription) where spelling errors can indicate that the writer is unfamiliar with the words in question. That appears to be the case here.

Pinker's central criticism largely boils down to phonetic reporting. Gladwell often goes into stories with a weak grasp of the field in question; as a result, he frequently makes serious mistakes, constantly misses important subtleties, and is almost completely dependent on his subjects for understanding and context. Add to this poor fact checking and a disturbing nonchalance about getting the story right, and things can get ugly quickly.

Somewhat ironically, Gladwell hit back at Pinker for employing one of the same techniques which Gladwell is so proud of, picking a detail that told a good story and memorably illustrated a larger idea. The Igon Value Problem worked beautifully on those terms but it was far from the most serious or conclusive example available, even if we limit ourselves to the single article in question, "Blowing Up."

For example, the piece is very much invested in the idea of Taleb as Wall Street revolutionary. We could quibble about just how radical the Black Swan ideas and strategies are, but it is an entirely defensible interpretation. Unfortunately, Gladwell doesn't really understand which ideas are debatably new and which are familiar to anyone in finance. Here's an excerpt (starting and ending mid-paragraph):

There was just one problem, however, and it is the key to understanding the strange path that Nassim Taleb has chosen, and the position he now holds as Wall Street's principal dissident. Despite his envy and admiration, he did not want to be Victor Niederhoffer -- not then, not now, and not even for a moment in between. For when he looked around him, at the books and the tennis court and the folk art on the walls -- when he contemplated the countless millions that Niederhoffer had made over the years -- he could not escape the thought that it might all have been the result of sheer, dumb luck.

Taleb knew how heretical that thought was. Wall Street was dedicated to the principle that when it came to playing the markets there was such a thing as expertise, that skill and insight mattered in investing just as skill and insight mattered in surgery and golf and flying fighter jets.

...

For Taleb, then, the question why someone was a success in the financial marketplace was vexing. Taleb could do the arithmetic in his head. Suppose that there were ten thousand investment managers out there, which is not an outlandish number, and that every year half of them, entirely by chance, made money and half of them, entirely by chance, lost money. And suppose that every year the losers were tossed out, and the game replayed with those who remained. At the end of five years, there would be three hundred and thirteen people who had made money in every one of those years, and after ten years there would be nine people who had made money every single year in a row, all out of pure luck.
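Gladwell's thought experiment is easy to check with a quick simulation. The sketch below is my own illustration, not anything from Gladwell or Taleb; the function name and seed are hypothetical. It assumes every surviving manager independently has a 50 percent chance of making money each year, drops the losers, and counts who is left. Since each manager's chance of five straight winning years is 1/2^5, the five-year count should land somewhere near 10,000/32, or about 312, with some run-to-run variation.

```python
import random


def simulate_survivors(managers: int = 10_000, years: int = 10, seed: int = 0) -> list[int]:
    """Coin-flip model of the managers-by-luck thought experiment.

    Each year every remaining manager independently makes money with
    probability 1/2 (pure luck), and the losers are dropped. Returns the
    number of managers at the start and after each year.
    """
    rng = random.Random(seed)  # seeded so a given run is reproducible
    counts = [managers]
    remaining = managers
    for _ in range(years):
        # Count how many of the remaining managers "win" this year's flip.
        remaining = sum(1 for _ in range(remaining) if rng.random() < 0.5)
        counts.append(remaining)
    return counts


counts = simulate_survivors()
print("after 5 years:", counts[5])
print("after 10 years:", counts[10])
```

Changing the seed changes the exact survivors, but never the moral: a handful of perfect track records shows up every time, with no skill anywhere in the model.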

But of course, Taleb didn't have to "do the arithmetic in his head" because, like virtually everyone else on Wall Street, he had probably read almost the same analogy in a famous passage from A Random Walk Down Wall Street by Burton Malkiel [transcribed via Dragon so beware of homonyms]:

Perhaps the laws of chance should be illustrated. Let's engage in a coin flipping contest. Those who can consistently flip heads will be declared winners. The contest begins and 1,000 contestants flip coins. Just as would be expected by chance, 500 of them flip heads and these winners are allowed to advance to the second stage of the contest and flip again. As might be expected, 250 flip heads. Operating under the laws of chance, there will be 125 winners in the third round, 63 in the fourth, 31 in the fifth, 16 in the sixth, and 8 in the seventh.

By this time, crowds start to gather to witness the surprising ability of these expert coin-flippers. The winners are overwhelmed with adulation. They are celebrated as geniuses in the art of coin-flipping, their biographies are written, and people urgently seek their advice. After all, there were 1000 contestants and only eight could consistently flip heads. The game continues and some contestants eventually flip heads nine and ten times in a row. [* If we had let the losers continue to play (as mutual fund managers do, even after a bad year), we would have found several more contestants who flipped eight or nine heads out of 10 and were therefore regarded as expert coin-flippers.] The point of this analogy is not to indicate that investment-fund managers can or should make their decisions by flipping coins, but that the laws of chance do operate and that they can explain some amazing success stories.
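Malkiel's round-by-round figures are just the expected number of perfect-heads flippers, N/2^k after k rounds, rounded to the nearest whole contestant. A minimal check in Python; the function name `expected_winners` is my own, and rounding half up is an assumption about how the published figures were rounded.

```python
import math


def expected_winners(contestants: int, rounds: int) -> list[int]:
    """Expected number of all-heads flippers after each round with a fair coin.

    After k rounds the expected count is contestants / 2**k. We round half up
    to the nearest whole person; Python's built-in round() uses banker's
    rounding and would turn 62.5 into 62, so floor(x + 0.5) is used instead.
    """
    return [math.floor(contestants / 2**k + 0.5) for k in range(rounds + 1)]


print(expected_winners(1000, 7))
# [1000, 500, 250, 125, 63, 31, 16, 8]
```

The same formula with 10,000 starters and five rounds gives 313, the five-year figure in the Gladwell excerpt.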

(I love that second paragraph. Pretty much any time I flip past CNBC it comes flooding back to mind.)

Malkiel published this book in 1973 and though it more than ruffled a few feathers, it quickly became one of the seminal books on investing. Malcolm Gladwell's New Yorker piece came out 25 years later.

None of this is meant to imply any kind of deliberate plagiarism. Quite the opposite. I very much doubt that Gladwell realized he was paraphrasing a well-known passage. What I strongly suspect happened was that Taleb cited this in an interview as a standard example that everyone would be familiar with, sort of like describing a situation as a "frog in boiling water."

Gladwell's unacknowledged paraphrase is yet another indication that he didn't understand the strange role that economic theory and particularly market efficiency (in this case the semi-strong variety) plays on Wall Street, a role that was central to his narrative. This would be bad enough if he was just shooting for a straightforward profile, but Gladwell insists on playing the deep thinker, making pseudo-profound points, even closing with a grand sweeping moral about human nobility:

“That is the lesson of Taleb and Niederhoffer, and also the lesson of our volatile times. There is more courage and heroism in defying the human impulse, in taking the purposeful and painful steps to prepare for the unimaginable.”

Gladwell loves to tell what Christopher Chabris has termed "just-so stories," cute little fables counterintuitive and surprising enough to catch the eye but neat and simple enough to go down easy. Paradoxically, pulling off that sort of simplicity requires that the writer have a deep and subtle understanding of his or her subject. Simplifying a subject you don't understand never goes well.

Tuesday, November 21, 2017

Tuesday Tweets

One of my great, ongoing problems is what to do with ideas that I'm not ready to write up or which are not ready to be written. One of the wonderful things about a blog is that it's a welcoming environment for these not-ready-for-prime-time pieces. It's low-stakes and flexible both in terms of length and format. In other words, a great place to pin notions and notes.

That said, there are things too insubstantial even for a blog. That and the need for self-promotion are the main reasons I've taken to Twitter. The bar for a tweet is not so much low as it is sitting on the ground. Nonetheless, there are a few that feel like they might be worth sharing, either because I liked how they turned out or (far more frequently) because they cite some article that might be of interest to our regular readers.

The evillest place on earth. Disney's chilling response to a critical LA Times story. @jayrosen_nyu @Sulliview https://t.co/vUA6CwRtaL— Mark Palko (@MarkPalko1) November 4, 2017

Given that for years, John Tierney was the face of New York Times science writing, anything by Brad Plumer has to be a vast improvement. https://t.co/VjjfWcGKjs— Mark Palko (@MarkPalko1) November 4, 2017

Someone should do a dangers-of-concentrated-media-power on this and Disney's attack on the LA Times. @Sulliview @jayrosen_nyu https://t.co/3n5K4nCJEF— Mark Palko (@MarkPalko1) November 6, 2017

Keep in mind, YouTube is a monopoly/monopsony owned by a monopoly/monopsony with a history of abusing its power. https://t.co/WUwABkZp4h— Mark Palko (@MarkPalko1) November 6, 2017

GOP “tax reform” spin relies heavily on journalists not understanding how marginal rates work.— Mark Palko (@MarkPalko1) November 7, 2017

It is getting more and more difficult to tell whether a story came from New York Magazine or Goop. https://t.co/kAgOi2Sow3 via @strategist— Mark Palko (@MarkPalko1) November 7, 2017

Abuses of media monopoly power have become increasingly flagrant and dangerous. Disney cannot be allowed to just walk away from this. https://t.co/82rCIScujZ— Mark Palko (@MarkPalko1) November 7, 2017

Monday, November 20, 2017

Australia citizenship furore

This is Joseph

Here is a case where they missed the main argument. In Australia, a number of MPs have been disqualified because the constitution prohibits dual citizens from running for office. The referenced article asks why MPs will not be forced to repay their salaries when welfare debts are collected all the time. Now, I am not necessarily a great fan of imposing debt collection on the most vulnerable. But that's not the real issue here.

The real issue is that you don't want to raise the stakes any higher. As it is, being disqualified is a terrible process for a politician to go through. Forcing the unexpectedly unemployed person to pay back hundreds of thousands of dollars can well add destitution to the consequences.

And, in some cases, this may be out of proportion to the crime. In some cases MPs were sloppy, cavalier, or deceptive, and in those cases this makes sense. These are not traits one would want. But how can you be sure you don't have citizenship somewhere? After all, many people have overseas links, and it is often possible that a parent has taken actions you are unaware of (estrangement does happen). Even more likely is ambiguity, as in the case of Susan Lamb: she might have UK citizenship but cannot prove it, and so cannot renounce it.

In complex administrative cases like these, being punitive only makes a bad situation worse. I am all in favor of rooting out corruption, but this would appear to risk making defiance the most viable strategy, and I am not sure that ends well.

Friday, November 17, 2017

That sense of living in a science fiction novel

As previously mentioned, I'm working on a project about technology. One of the central points is that the ways we think and feel about technology (the language, the attitudes, the mental frameworks) were largely in place by the early 20th century and became set in the postwar era.

When people internalize their reactions to abnormal conditions and unconsciously continue to respond to new things in old ways, the result is seldom good. We'll delve more into that later. For now, we're still in the anecdote- and data-gathering stage of the process, so here's an excellent example of how even the most sober observers of the period were so conscious of how astounding the times were that they explicitly described the advances of the day in terms of science fiction novels (even before the term "science fiction" had been coined).

From Scientific American May 17, 1890

We know that at the time of the first official experiment, the two navigators of the Goubet remained eight full hours under water without any other communication with the outside world than the telephone wire that they used to give their impressions, which, by the way, were of a cheerful character. When they made their appearance, they were fresh, well, and lively, having been able to attend to all the functions of life, and being ready to begin again. There was still in the tubes enough oxygen to last twenty hours.

It has been said of the Goubet that it is a realization of the dream conceived by Jules Verne in his "Twenty Thousand Leagues under the Sea;" but the Goubet is better than that. It is not only one romance, but it is rather two of the great amuser's romances amalgamated. It is both "Twenty Thousand Leagues under the Sea" and "Doctor Ox" in action!

Thursday, November 16, 2017

Tax policy

This is Joseph

There are two pieces of journalism by Kevin Drum that are worth not missing.

One is his observation that some of the current tax reform plans are absurdly generous to inherited wealth:

If you’re not following what this means, here’s an example. Suppose you’re uber-rich and you buy $1 billion in Apple Stock. By the time you die it’s worth $3 billion. Your heirs, lucky ducks that they are, don’t have to pay estate tax on that $3 billion. But they do have to pay normal capital gains on the $2 billion appreciation in the Apple stock. At 20 percent that comes to $400 million.

However, the Republican bill eliminates that too. Not only does it eliminate the estate tax completely, but it allows you to “step up” the value of the estate and avoid capital gains taxes entirely. In our example, you literally get $3 billion free and clear, and you owe taxes in the future only on the appreciation above $3 billion.

Now, it is possible to decide that there should not be capital gains taxes. This is actually an area of policy discussion; Canada has had a lifetime capital gains exemption. But if this is what you would like to see happen, then you should do it for all capital gains and not focus all of the benefit on the wealthy. And if you don't exempt these assets from capital gains, then the paperwork can get messy. So, in a sense, the estate tax is a nice way to give the gift of less paperwork to recently bereaved families (not a bad thing).

But turning tax policy into a way to maximize the benefits of inherited wealth seems to be an unnecessary enhancement, above and beyond the paperwork simplification. There should be one or the other.
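Drum's example boils down to a few lines of arithmetic. The sketch below simply encodes the quoted hypothetical (a $1 billion purchase worth $3 billion at death, taxed at 20 percent); the function name is my own, and it models only that simplified framing, not actual tax law.

```python
def heirs_capital_gains_tax(cost_basis: float, value_at_death: float,
                            rate: float, step_up: bool) -> float:
    """Tax the heirs would owe on selling inherited stock at value_at_death.

    Follows the simplified framing of the quoted example, not real tax law:
    without a step-up the heirs inherit the original cost basis; with a
    step-up the basis is reset to the value at death, so no gain remains.
    """
    basis = value_at_death if step_up else cost_basis
    gain = max(value_at_death - basis, 0.0)
    return gain * rate


BILLION = 1_000_000_000
no_step_up = heirs_capital_gains_tax(1 * BILLION, 3 * BILLION, 0.20, step_up=False)
with_step_up = heirs_capital_gains_tax(1 * BILLION, 3 * BILLION, 0.20, step_up=True)
print(f"without step-up: ${no_step_up:,.0f}")  # without step-up: $400,000,000
print(f"with step-up:    ${with_step_up:,.0f}")  # with step-up:    $0
```

The only thing the step-up changes is the basis the heirs start from, which is why the entire $2 billion of appreciation drops out of the calculation.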

Two is his piece on how tax havens actually make income taxes regressive in Scandinavia. It's a good item for considering how much real tax burden there is among the elite (the ones who are getting a large tax cut, or at least potentially getting one). You can make moral arguments about the right level of obligation people have to support the nations and laws that make their wealth possible. It's a complicated area. But this does somewhat undermine the narrative that taxes at the top are actually crushing.

Wednesday, November 15, 2017

What if shame works?

I've been meaning to do multiple posts on Patrick Radden Keefe's extraordinary article on how the Sackler family built a fortune by largely engineering the opioid crisis. You should definitely read the whole thing, but there are a number of interesting points that are worth singling out.

For instance, the role of social pressure in curbing bad corporate behavior.

Mike Moore, the former Mississippi attorney general, believes that the Sacklers will feel no pressure to emulate this gesture until more of the public becomes aware that their fortune is derived from the opioid crisis. Moore recalled his initial settlement conference with tobacco-company C.E.O.s: “We asked them, ‘What do you want?’ And they said, ‘We want to be able to go to cocktail parties and not have people come up and ask us why we’re killing people.’ That’s an exact quote.” Moore is puzzled that museums and universities are able to continue accepting money from the Sacklers without questions or controversy. He wondered, “What would happen if some of these foundations, medical schools, and hospitals started to say, ‘How many babies have become addicted to opioids?’ ” An addicted baby is now born every half hour. In places like Huntington, West Virginia, ten per cent of newborns are dependent on opioids. A district attorney in eastern Tennessee recently filed a lawsuit against Purdue, and other companies, on behalf of “Baby Doe”—an infant addict.

There is something both sad and hopeful in this passage.

One of the overarching themes of this article is the way we are replaying the Gilded Age in increasingly obvious ways. Specifically, the piece compares 19th-century robber barons buying respectability with libraries and universities to today's billionaires doing much the same (though I think the comparison may be somewhat unfair to Carnegie and Stanford). This certainly applies to the Sackler family.

But if vanity and the pursuit of respectability can persuade plutocrats to give large chunks of money to good causes (or at least causes with the appearance of good), is it possible that fear of shame might dissuade these same people from doing bad things?

Tuesday, November 14, 2017

If you believe in n-rays, believing in mental radio is not that heavy a lift

Another visit to the Scientific American collection of the Internet Archive.

Having spent a big chunk of the past few weeks reading contemporary accounts of science and technology from the late 19th and early 20th centuries, I've come to the conclusion that, from our 21st-century perspective, we tend to overestimate the credulousness of people at the turn of the century while greatly underestimating our own gullibility.

The key here is context: belief in something (or, more precisely, belief in the possibility of something) that turns out not to exist has to be judged against the amount of evidence (or, more precisely, the amount of non-evidence) that had accumulated at the time. A hundred and fifty years ago or so, sending expeditions to look for legendary mega-fauna was an entirely reasonable type of scientific investigation. Fifty years ago, not so much. Today, not at all. Every time a researcher, preferably one who very much wants to find the phenomenon in question, comes up empty, the null result adds to the evidence that the phenomenon simply doesn't exist. Eventually, enough would-be believers dig enough dry wells to make its nonexistence a confirmed scientific fact.
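The dry-wells argument is essentially Bayesian updating, and a toy model makes it concrete. This is a minimal Python sketch under stated assumptions, not a model from the post: searches are independent, a real phenomenon would be found by each search with some fixed probability `detect_prob`, and a nonexistent one is never "found" (no false positives, an assumption the n-ray episode shows is not always safe). The prior and detection probability in the usage lines are made-up illustrative numbers.

```python
def posterior_exists(prior, detect_prob, failed_searches):
    """Posterior probability that a phenomenon exists after n empty searches.

    Toy model (assumed): if the phenomenon is real, each independent search
    finds it with probability detect_prob; if it is not real, every search
    comes up empty. Bayes' rule then gives
        P(real | n misses) = prior * (1 - d)**n / (prior * (1 - d)**n + (1 - prior))
    """
    likelihood_if_real = (1 - detect_prob) ** failed_searches
    numerator = prior * likelihood_if_real
    return numerator / (numerator + (1 - prior))


# Illustrative numbers only: a 50/50 prior and searches that would
# catch a real effect 30 percent of the time.
for n in (0, 5, 10, 20):
    print(n, round(posterior_exists(0.5, 0.3, n), 4))
```

Each dry well multiplies the odds that the phenomenon exists by (1 - d), so a more capable (or more motivated) searcher, with a larger d, drives the posterior down faster, while a search that could never have found the effect (d = 0) tells you nothing.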

But there's another important difference between 1900 and 2017 that needs to be taken into account. Without downplaying today's steady and impressive flow of new ideas and discoveries, we now generally work within a relatively stable intellectual framework. We reject the possibility of telepathy in part because so many motivated researchers have failed to find it, but also because no one has proposed a plausible mechanism for it consistent with the other things we know with great confidence about the world.

The close of the 19th century was a time of great optimism and discovery, but as a consequence not one of great certainty. Science and technology were causing cataclysmic shifts in the way people viewed the world. When Einstein endorsed a book on mental telepathy and Tesla speculated that he had just received communications from Martians, they were working under a framework that was assumed to be changing and incomplete.

Coming shortly after the discovery of x-rays, n-rays did not initially seem all that implausible, and once you've accepted the possibility of a new kind of radiation that was related to biological and even mental activities, accepting ideas like "mental radio" and even spiritualism isn't that much of a reach. N-rays themselves were debunked fairly quickly (see below for the fascinating details), but the sense that everything you know can suddenly change remained a key part of the mentality of the period.

June 17, 1905

The debunking of n-rays is itself a fascinating story. From Wikipedia.

The "discovery" excited international interest and many physicists worked to replicate the effects. However, the notable physicists Lord Kelvin, William Crookes, Otto Lummer, and Heinrich Rubens failed to do so. Following his own failure, self-described as "wasting a whole morning", the American physicist Robert W. Wood, who had a reputation as a popular "debunker" of nonsense during the period, was prevailed upon by the British journal Nature to travel to Blondlot's laboratory in France to investigate further. Wood suggested that Rubens should go since he had been the most embarrassed when Kaiser Wilhelm II of Germany asked him to repeat the French experiments, and then after two weeks Rubens had to report his failure to do so. Rubens, however, felt it would look better if Wood went, since Blondlot had been most polite in answering his many questions.

In the darkened room during Blondlot's demonstration, Wood surreptitiously removed an essential prism from the experimental apparatus, yet the experimenters still said that they observed N rays. Wood also stealthily swapped a large file that was supposed to be giving off N rays with an inert piece of wood, yet the N rays were still "observed". His report on these investigations was published in Nature, and it suggested that the N rays were a purely subjective phenomenon, with the scientists involved having recorded data that matched their expectations. There is reason to believe that Blondlot in particular was misled by his laboratory assistant, who confirmed all observations. By 1905, no one outside of Nancy believed in N rays, but Blondlot himself is reported to have still been convinced of their existence in 1926.

Monday, November 13, 2017

Cutting-edge solar power circa 1904

Came across this while researching something else. It struck me as an interesting glimpse into the early days of solar power. More to the point, it had a cool picture.

Friday, November 10, 2017

Why do we still have cities?

Following up on "remembering the future."

Smart people, like statisticians' models, are often most interesting when they are wrong. There is no better example of this than Arthur C. Clarke's 1964 predictions about the demise of the urban age, in which he suggested that what we would now call telecommuting would end the need for people to congregate around centers of employment and would therefore mean the end of cities.

What about the city of the day after tomorrow? Say, the year 2000. I think it will be completely different. In fact, it may not even exist at all. Oh, I'm not thinking about the atom bomb and the next Stone Age; I'm thinking about the incredible breakthrough which has been made possible by developments in communications, particularly the transistor and above all the communications satellite. These things will make possible a world where we can be in instant contact with each other wherever we may be, where we can contact our friends anywhere on earth even if we don't know their actual physical location. It will be possible in that age, perhaps only 50 years from now, for a man to conduct his business from Tahiti or Bali just as well as he could from London. In fact, if it proved worthwhile, almost any executive skill, any administrative skill, even any physical skill could be made independent of distance. I am perfectly serious when I suggest that someday we may have brain surgeons in Edinburgh operating on patients in New Zealand. When that time comes, the whole world will have shrunk to a point and the traditional role of a city as the meeting place for man will have ceased to make any sense. In fact, men will no longer commute; they will communicate. They won't have to travel for business anymore; they'll only travel for pleasure. I only hope that, when that day comes and the city is abolished, the whole world isn't turned into one giant suburb.

Clarke was working with a 20- to 50-year timeframe, so it's fair to say that he got this one wrong. The question is why. Both as a fiction writer and a serious futurist, the man was remarkably and famously prescient about telecommunications and its impact on society. Even here, he got many of the details right while still being dead wrong on the conclusion.

What went wrong? Part of this unquestionably has to do with the nature of modern work. Clarke probably envisioned a more automated workplace in the 21st century, one where stocking shelves and cleaning floors and, yes, driving vehicles would be done entirely by machines. He likely also underestimated the intrinsic appeal of cities.

But I think a third factor may well have been bigger than either of those two. The early 60s was an anxious but optimistic time. The sense was that if we didn't destroy ourselves, we were on the verge of great things. The 60s was also the last time that there was anything approaching a balance of power between workers and employers.

This was particularly true of mental work. At least in part because of the space race, companies like Texas Instruments were eager to find smart, capable people. As a result, employers were extremely flexible about qualifications (a humanities PhD could actually get you a job) and they were willing to make concessions to attract and keep talented workers.

Telecommuting (as opposed to offshoring, a distinction we'll need to get into in a later post) offers almost all of its advantages to the worker. The only benefit to the employer is the ability to land an otherwise unavailable prospect. From the perspective of 1964, that would have seemed like a good trade, but those days are long past.

For the past 40 or so years, employers have worked under (and now completely internalized) the assumption that they could pick and choose. When most companies post jobs, they are looking for someone who either has the exact academic background required or, preferably, someone who is currently doing almost the same job for a completely satisfied employer and yet is willing to leave for roughly the same pay.

When you hear complaints about "not being able to find qualified workers," it is essential to keep in mind this modern standard for "qualified." 50 or 60 years ago it meant someone who was capable of doing the work with a bit of training. Now it means someone who can walk in the door, sit down at the desk, and immediately start working. (Not to say that new employees will actually be doing productive work from day one. They'll be sitting in their cubicles trying to look busy for the first two or three weeks while IT and HR get things set up, but that's another story.)

Arthur C. Clarke was writing in an optimistic age when workers were on almost equal footing with management. If the year 2000 had looked like the year 1964, he just might have gotten this one right.
