Guess what. If you start a post in HTML view while embedding your tweets, then switch to compose view to edit, rearrange, and add comments, then switch back to HTML to add one more tweet, be careful not to hit Control-Z, because it will undo everything you did in compose view, and you can't Control-Y it back.

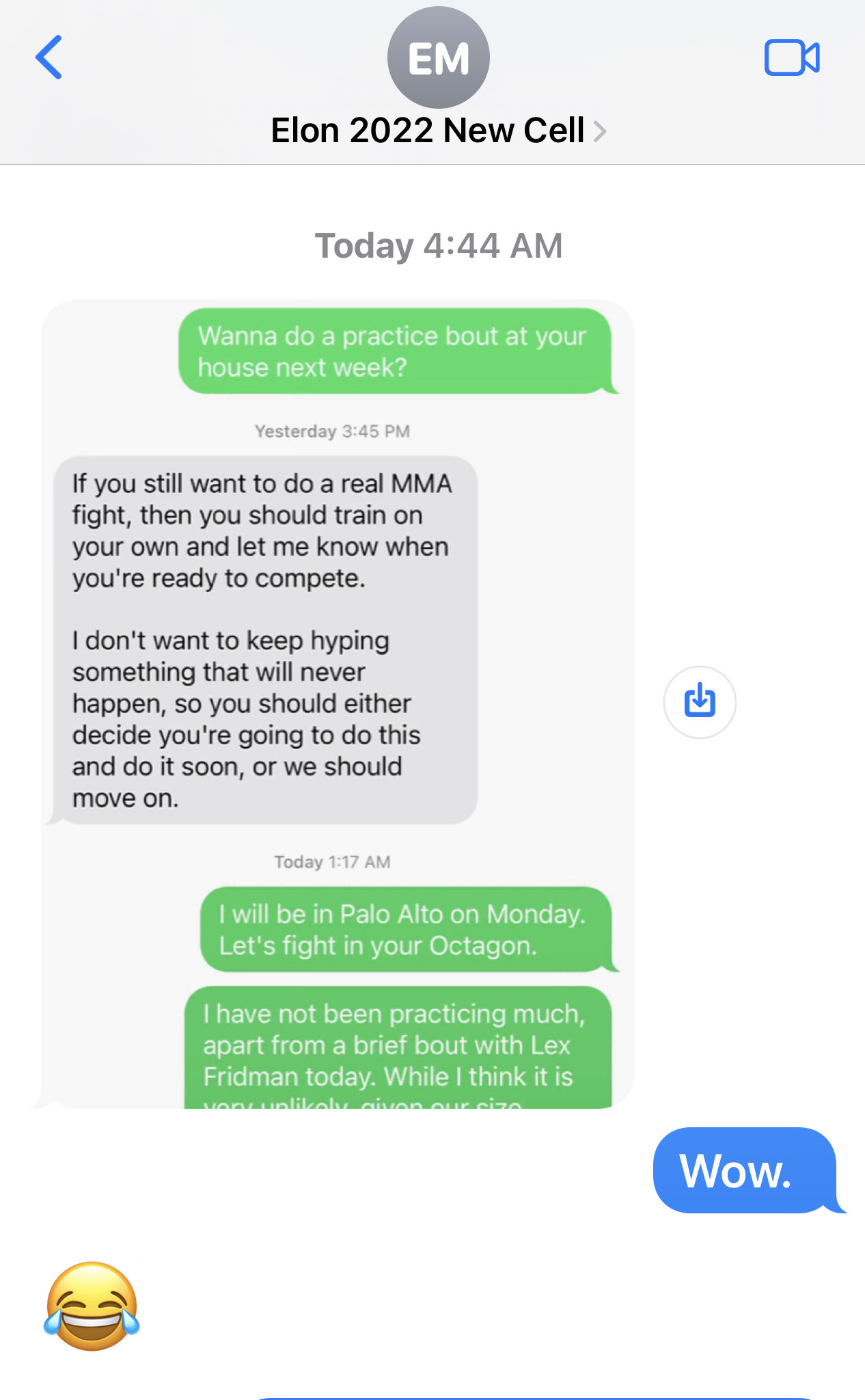

Let's start with the best show on Twitter... its owner trying to get out of fight club with some portion of his dignity.

this is truly the Canadian girlfriend of excuses pic.twitter.com/kyEHNFq0bf

— shauna (@goldengateblond) August 7, 2023

Hello, he lied. pic.twitter.com/Bfnl7El9nS

— Kara Swisher (@karaswisher) August 12, 2023

I suspect that Elon was hoping that Zuckerberg would play along and let him off the hook, but Zuck had apparently had enough.

So Musk is in "somebody hold me back" mode.

So does he need surgery or not? 🤡 pic.twitter.com/uwjETBLUNh

— LeGate (@williamlegate) August 12, 2023

In a quote tweet of this Walter Isaacson post, New York Times Pitchbot commented "One of America’s most respected journalists."

Imagine leaking this to your biographer and thinking it makes you look good https://t.co/kxQJJzGHhs

— Ryan Mac 🙃 (@RMac18) August 13, 2023

Blogger does strange things, so just in case it decides to crop the tweet's image, here's the original.

Elsewhere in the world of the site formerly known as Twitter.

3. DOJ was laser focused on getting ALL OF IT IMMEDIATELY. So urgently they asked to fine twitter 50k for day 1, 100k for day 2 and doubling every day.

— Stephanie Ruhle (@SRuhle) August 16, 2023

4. Twitter fought hard & spent tons of $ fighting on trump’s behalf.

Noteworthy-They had never before fought a subpoena in court

Elon Musk hired expensive lawyers to fight a lawful search warrant to defend Donald Trump’s interests.

— Renato Mariotti (@renato_mariotti) August 16, 2023

Twitter receives court orders like this regularly, and doesn’t spend that kind of money to fight them on behalf of customers.

Why the special treatment for Trump, @elonmusk? https://t.co/bNxYn6o69W

This guy nails it pic.twitter.com/f6vR7avQQn

— Stop Cop City (@JoshuaPHilll) August 12, 2023

Seguing to another member of the PayPal mafia,

Quite remarkable - note the violence proposed by Thiel in particular: https://t.co/14hloTaJtQ pic.twitter.com/p5zhjppvG9

— Critical AI : first issue coming in Aug. 23! :) (@CriticalAI) August 13, 2023

And bigger news.

Smith and Willis highlight the strength of our federalist system. Smith charged under federal law; Willis under state law. Though the facts overlap, not identical. Together, they bring a full accounting of Trump’s actions. She CANNOT ignore crime spree

— Jennifer Now at Threads Rubin (@JRubinBlogger) August 15, 2023

https://t.co/5hsbcmm2lW

How it started. How it’s going. pic.twitter.com/525NhndYp9

— New York Times Pitchbot (@DougJBalloon) August 15, 2023

Donald Trump was indicted yesterday and today's @nytimes front page gives it a third as much space as it devoted to the infamous Hillary's Email story ... and Trump's name isn't even in the headline pic.twitter.com/2VnH5rC1aB

— Jamison Foser (@jamisonfoser) August 15, 2023

Jeff Gerth is the same credulous, ignorant, ill-informed hack who brought us Whitewater. The NYT created him. It is poetic justice for them to now know how he treats his subjects.

The NYT was not "shocked" that Mueller's investigation closed with no "collusion" arrests. Dean Baquet did not actually say anything remotely like that. One of many instances in which the Gerth series is misleading, and right at the top. https://t.co/mZHt2r87iu pic.twitter.com/aNQWTut3yp

— Andrew Prokop (@awprokop) August 16, 2023

A typical times article. The simplest answer is that the House GOPs are full of shit and simply changed their position. And the article itself struggles not to come to that conclusion. Sad. https://t.co/MfeUffqupL

— Josh Marshall (@joshtpm) August 13, 2023

"Shock and outrage over the fall of Roe v. Wade has faded as confusion has spread, deflating Democrats’ hopes that the issue could carry them to victory"

Thinking about this headline from November 2022 for some reason. pic.twitter.com/NjKS1kkfqm

— Ammar Moussa (@ammarmufasa) August 9, 2023

Russian state propaganda outlets RT & Sputnik are both promoting RFK Jr’s comments about US biolabs in Ukraine, which he discussed on Tucker Carlson this week.

— Caroline Orr Bueno, Ph.D (@RVAwonk) August 16, 2023

The Tucker-to-Russia pipeline is among Russia’s most successful propaganda operations, so it’s worth paying attention. pic.twitter.com/VCcVKLh5lp

53% of Americans say they will definitely not vote for Trump in ‘24, with a further 11% saying they probably won’t. Here’s why that’s bad news for Joe Biden.

— New York Times Pitchbot (@DougJBalloon) August 16, 2023

"Oops, I made a mistake" doesn't entirely set things right for helping bring the country to the verge of fascism.

You’re ridiculous. My goodness….

— Eddie S. Glaude Jr. (@esglaude) August 15, 2023

That’s him.

— yvette nicole brown (@YNB) August 15, 2023

Because when you're defending your decision to spend big money addressing climate change, the last thing you want people talking about is climate change.

This you, ma’am? pic.twitter.com/Yxxd6K8Ku7

— Charles Gaba isn't paying for this account. (@charles_gaba) August 17, 2023

Desantis issues a pathetic, ridiculous statement in response to the Trump indictment, bragging that he suspended two DAs in FL, and “as president, we will lean in on some of these local prosecutors.” pic.twitter.com/92O9ZjaXl9

— Ron Filipkowski (@RonFilipkowski) August 15, 2023

I'm a little more bullish on RD than Frum, but it is amazing how the consensus has shifted.

DeSantis laying the groundwork to end his campaign and endorse Trump. https://t.co/x5xHLLYwSO

— David Frum (@davidfrum) August 13, 2023

Normally, it's not the defendant who has the option of 'moving on.'

Let’s just move on, Bob. Forget the whole thing. What do you say, pal?

— Ron Filipkowski (@RonFilipkowski) August 14, 2023

Isn’t the campaign slogan, ‘Never Back Down”? pic.twitter.com/j4yoaM1C8Z

Nikki Haley is mobbed by fans and supporters. pic.twitter.com/7LRGeGvNFG

— Ron Filipkowski (@RonFilipkowski) August 12, 2023

Basically, Dean Phillips just wants attention.

Every person on this list is a Biden supporter. They aren’t running against @JoeBiden because they are working to get him re-elected. Let’s stop the pot stirring hype. https://t.co/C6bwUjXlhC

— Ronald Klain (@RonaldKlain) August 13, 2023

🚨Maria Bartiromo spoke about the Covid-19 pandemic with Sen. Ron Johnson (R-WI) on Friday morning.

— The Intellectualist (@highbrow_nobrow) August 12, 2023

Johnson claimed the pandemic was “all pre-planned by an elite group of people” as part of an ongoing scheme to take away Americans’ freedoms. @Mediaite https://t.co/20GxfHuJO8

We've said before that Loeb has been feeding his considerable reputation into the woodchipper. Now he's found the best network for it.

Avi Loeb on extraterrestrial civilizations

— UAP James (@UAPJames) August 11, 2023

“You can imagine that a superhuman civilization that understands how to unify quantum mechanics and gravity might actually be able to create a baby universe in the laboratory.

A quality that we assign to God.” #ufotwitter #ufox #ufo pic.twitter.com/7zb2LnonoS

The Internet Archive does good, important work, particularly as preservationists. I give them money. You should too.

Now the Washington lawyers want to destroy digital collections of scratchy 78rpm records, 70-120 year old, built by dedicated preservationists online since 2006.

— Brewster Kahle (@brewster_kahle) August 13, 2023

Who benefits? https://t.co/xjEqLdZi7n

A lot at stake in this lawsuit! If the rightsholders lose, what incentive will there be for Bing Crosby and Duke Ellington to make any more records? https://t.co/kpujXeDzrX

— Stian Westlake (@stianwestlake) August 12, 2023

Adventures in AI

Fantastic. pic.twitter.com/jBTjRqlmSF

— Ben Collins (@oneunderscore__) August 15, 2023

Going into exploitation mode (scaling up what works) can give you the illusion of fast progress when you're looking at the wrong metrics. Like "solving" air travel by forever scaling up a hydrogen zeppelin. It may seem like you can cover ever greater distances, but you're still…

— François Chollet (@fchollet) August 12, 2023

One of the ways in which the web is like an ecosystem is that a synthetic text spill in one part of it can leak into others. Here, someone has posted ChatGPT output (unclear to what end) and the Google indexed it: pic.twitter.com/EFO4N21Zek

— @emilymbender@dair-community.social on Mastodon (@emilymbender) August 16, 2023

Today's case study in: If you're going to set up a synthetic media machine where anyone can access it, you'll need to account for people throwing all kinds of random stuff at it.

— @emilymbender@dair-community.social on Mastodon (@emilymbender) August 12, 2023

>>https://t.co/m19z9rxHi0

I was surprised by a talk Yejin Choi (an NLP expert) gave yesterday in Berkeley, on some surprising weaknesses of GPT4:

— Alex Dimakis (@AlexGDimakis) August 16, 2023

As many humans know, 237*757=179,409

but GPT4 said 179,289.

For the easy problem of multiplying two 3 digit numbers, they measured GPT4 accuracy being only… pic.twitter.com/kp3TDBaWId
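For reference, the correct product is easy to check by long multiplication: split 757 into 700 + 50 + 7 and add up the partial products. A small Python sketch (the variable names are just for illustration):

```python
a, b = 237, 757
# Long multiplication: split 757 into 700 + 50 + 7 and add the partial products.
partials = [a * 700, a * 50, a * 7]  # 165,900 + 11,850 + 1,659
total = sum(partials)
assert total == a * b  # sanity check against Python's own multiplication
print(f"{a} * {b} = {total:,}")  # 237 * 757 = 179,409

gpt4_answer = 179_289  # the answer reported in the tweet above
print(f"GPT-4's answer is off by {total - gpt4_answer:,}")  # off by 120
```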

Notes from academia.

Here's the group that is dismantling WVU.

— Dr. Lisa Corrigan (@DrLisaCorrigan) August 12, 2023

They have been hired as consultants at land-grant universities around the country to help shred the liberal arts. Would be sensible for people to know who they are, as more unis hire them: https://t.co/u6Ic02EtOH

Again: they are not only coming after the Marxist Queer Critical Theory people. The goal is to strip public university systems to the bone in their entirety. They don’t care that these are load bearing walls for the state’s economy, it owns the libs. https://t.co/dwXxNhl4D7

— William B. Fuckley (@opinonhaver) August 11, 2023

Data Colada have collectively volunteered thousands of hours to improve science. Unfortunately, sometimes when you scrutinize the work of powerful people...they sue you. Together, we can stand up for science and help them defend themselves in court. https://t.co/S3GgLhmqAO

— Yoel Inbar (@yorl) August 16, 2023

And in closing...

In a series of events, all of which had been a bit thick, this, in his opinion, achieved the maximum of thickness.

— Wodehouse Tweets (@inimitablepgw) August 16, 2023

Hi, Mark:

I'm always happy to talk about LLMs. So much so that I'm tempted to go full circle, jump out of Bayesian stats, and get back into natural language processing.

I'm a bit perplexed by everyone's skepticism. I understand that an LLM like ChatGPT is neither embodied nor learning language the way a human does.

The first talk I saw Geoff Hinton give (early 90s, CMU psych dept) was about how neural networks captured the "U-shaped learning curve," where children first mimic, then overgeneralize, then learn the exceptions. I don't think anyone has done this kind of analysis for transformers. Human children learn language from exposure to far fewer tokens than GPT sees, and they do it interactively in the real world. By the time children have acquired language, they typically control tens of thousands of words in one or two languages. By the time ChatGPT is trained, it can employ hundreds of thousands of words in hundreds of languages. It's also superhuman in that it overcomes our pesky attention limits, as seen in constructions like center embeddings. In sequences like "the mouse ran", "the mouse the cat chased ran", "the mouse the cat the dog bit chased ran", humans get overwhelmed at about this point, but ChatGPT merrily deconstructs the right relationships five or six levels deep.
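The nesting pattern in those example sentences is mechanical enough to generate. The sketch below uses a hypothetical helper, `center_embed`, and assumes that each noun is the subject of the verb at the same index, so the verbs come out in reverse order. It also lists the who-did-what relations a reader has to keep track of at each depth, which is exactly what becomes hard for people past two or three levels.

```python
def center_embed(nouns: list[str], verbs: list[str]) -> tuple[str, list[str]]:
    """Build a center-embedded sentence from parallel noun/verb lists.

    Assumption (for illustration): verbs[i] has nouns[i] as its subject, and
    for i >= 1 its object is nouns[i - 1], the noun its clause modifies.
    """
    if len(nouns) != len(verbs):
        raise ValueError("need exactly one verb per noun")
    # Nouns stack up left to right; verbs close them innermost-first.
    subjects = " ".join(f"the {noun}" for noun in nouns)
    sentence = subjects + " " + " ".join(reversed(verbs))
    # Spell out the who-did-what relations a reader has to recover.
    relations = [f"the {nouns[0]} {verbs[0]}"]
    for i in range(1, len(nouns)):
        relations.append(f"the {nouns[i]} {verbs[i]} the {nouns[i - 1]}")
    return sentence, relations


nouns = ["mouse", "cat", "dog"]
verbs = ["ran", "chased", "bit"]
for depth in range(1, len(nouns) + 1):
    sentence, relations = center_embed(nouns[:depth], verbs[:depth])
    print(sentence)
    print("   ", "; ".join(relations))
```

Running the loop reproduces the three sentences from the comment, from "the mouse ran" up to "the mouse the cat the dog bit chased ran". Adding more nouns and verbs to the lists gives the deeper embeddings that trip up human readers.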

Does ChatGPT understand language? Certainly not in the same way as a person. In-context learning only goes so far and it's basically stuck at whatever it was pretrained on (the "P" in "GPT"). A simpler question is whether AlphaGo understands Go. Again, not in the same way as a person. But AlphaGo is interesting in that it came up with novel strategies from which humans learned. GPT can also generate novel moves in language in the sense that it's generating new sentences. Is it just monkeys at a typewriter? If so, they're damn lucky monkeys who act an awful lot like someone who understood the chat dialogue.

(Too long to fit in one comment!) To answer the question more precisely, we have to be more precise about the semantics of the word "understand". How are you going to define it, other than as "what people do with their meat-based brains," in a way that excludes something like ChatGPT? That's tricky, and it's why I was urging people to check out Steven Piantadosi's talk at the LLM workshop in Berkeley titled "Meaning in the age of large language models". He starts us down the postmodern road of understanding language and concepts as a network of associations. This is relevant because philosophers (and to some extent cognitive scientists) spent the 20th century trying to unpack what "embodied", "understand", and "perception" mean.

Another way to think about this is in terms of interpolation and extrapolation. I hear a lot of "ML can only interpolate" talk, but I'm not sure what that means in high dimensions, where none of the outputs are going to land in the convex hull defined by the training data. I also hear a lot of "it's a stochastic parrot," but the parrots I've seen all have a limited repertoire of fixed phrases. ChatGPT almost never says the same thing twice (unless it's trying to dodge answering---then it's annoyingly repetitive).
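One way to see the high-dimensional point concretely is a quick simulation. A new point that falls outside the axis-aligned bounding box of the training data is necessarily outside its convex hull, so the fraction of fresh points landing inside the box is an upper bound on the fraction landing inside the hull. The sketch below assumes standard Gaussian data and 100 training points, and `frac_inside_bounding_box` is a hypothetical name. It illustrates the geometry only and says nothing about any particular model.

```python
import random


def frac_inside_bounding_box(dim: int, n_train: int = 100,
                             n_test: int = 2000, seed: int = 0) -> float:
    """Fraction of fresh Gaussian points inside the training bounding box.

    Being inside the box is necessary (not sufficient) for being inside the
    convex hull, so this is an upper bound on the in-hull fraction.
    """
    rng = random.Random(seed)
    train = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_train)]
    # Per-coordinate min and max of the training data.
    lo = [min(x[j] for x in train) for j in range(dim)]
    hi = [max(x[j] for x in train) for j in range(dim)]
    inside = 0
    for _ in range(n_test):
        z = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        if all(lo[j] <= z[j] <= hi[j] for j in range(dim)):
            inside += 1
    return inside / n_test


for dim in (1, 10, 50, 200):
    print(dim, frac_inside_bounding_box(dim))
```

With 100 training points, each coordinate of a fresh point lands inside the training range with probability 99/101 on average, so the expected in-box fraction decays roughly like (99/101)^d: close to 1 in one dimension and down to a couple of percent by d = 200. The in-hull fraction can only be smaller.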

Whether or not these LLMs understand according to some fine-grained definition, I'm finding them incredibly useful. I'm mainly using GPT-4 to (a) generate code for munging data and making graphics in Python, (b) generate suggestions for role-playing games, including random tables and magic item tables, (c) help me rewrite things I'm having trouble phrasing clearly, and (d) tutor me in math and science subjects.

For (a), it's better at these tasks than I am---I can never remember how to draw a thick vertical line of a given color at a given x-axis position. It can even take descriptions of outputs and generate TikZ graphics instructions in LaTeX for me, or convert markdown tables to LaTeX tables and vice versa. For (b), I'd say it's an incredibly productive brainstorming partner. I don't think it comes up with ideas as novel as those a really talented and creative person would, but it's better at it than I am. It's also good for technical uses, such as when you say you're writing a textbook and ask what it thinks you should cover (at any level of granularity). For (c), the whole reason I'm using it is that I can't figure out how to say something clearly, and it reorganizes everything and states it clearly. For (d), it can be downright amazing. I've used it to teach me about biases in RNA sequencing, about measure-theoretic approaches to proving MCMC convergence, and about pretty much anything that comes up that I might have Googled in the past and then had to read through technical articles to understand. It's also great at converting notation. I've heard a lot of reports about how much people like it as a tutor because of its patience in answering streams of "why?" questions.
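To give a sense of the markdown-to-LaTeX chore mentioned above, here is a hand-written sketch of the conversion for simple pipe tables. It is not what GPT-4 produces, and `markdown_table_to_latex` is a hypothetical name. It assumes the first row is the header, drops the `---` separator row, left-aligns every column (ignoring any `:` alignment markers), and escapes only a handful of LaTeX special characters.

```python
_LATEX_SPECIALS = {"&": r"\&", "%": r"\%", "_": r"\_", "#": r"\#", "$": r"\$"}


def _escape(cell: str) -> str:
    """Escape a few characters that are special in LaTeX (not all of them)."""
    return "".join(_LATEX_SPECIALS.get(ch, ch) for ch in cell)


def markdown_table_to_latex(md: str) -> str:
    """Convert a simple pipe-delimited markdown table to a LaTeX tabular."""
    rows = []
    for line in md.strip().splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Skip the header separator row, e.g. |---|:---:|
        if all(c and set(c) <= set("-: ") for c in cells):
            continue
        rows.append([_escape(c) for c in cells])
    if not rows:
        raise ValueError("no table rows found")
    header, body = rows[0], rows[1:]
    lines = [f"\\begin{{tabular}}{{{'l' * len(header)}}}", r"\hline"]
    lines.append(" & ".join(header) + r" \\")
    lines.append(r"\hline")
    for row in body:
        lines.append(" & ".join(row) + r" \\")
    lines.append(r"\hline")
    lines.append(r"\end{tabular}")
    return "\n".join(lines)


table = """
| Item | Weight (lb) |
|------|------------:|
| Rope_50ft | 10 |
| Lantern | 2 |
"""
print(markdown_table_to_latex(table))
```

The edge cases this skips (escaped pipes inside cells, column alignment, multi-line cells) are the fiddly part, which is presumably why handing the job to an LLM is attractive.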

What is it not so good at? I tried designing a whole dungeon, but it needs to be constantly reminded of context. It never really "learns" in context, and the attention models are only so good over long distances. It's terrible at Stan code beyond a few trivial examples. When I get into niche statistical topics like the infinitesimal jackknife, it starts confusing the math and the algorithm (though its top-level descriptions of what it is and why it's used are spot on). I also can't coax it into writing at a really high level of quality, like a good textbook or the New Yorker (though ask it to give you the outline and plot for five Booker-prize-winning novels and you'll see that it totally gets genre vs. literature)---it just can't write the books at that level.